Introduction: Vector space – Serlo

We already know the vector spaces $\mathbb{R}^2$ and $\mathbb{R}^3$ from school, where we got to know them in the form of coordinate systems. In mathematics, the concept of a vector space is much broader. In the following, we will develop the abstract mathematical concept of a vector space, starting from the vector spaces known from school. Vector spaces have wide applications in science, technology and data analysis.

The vector space $\mathbb{R}^n$

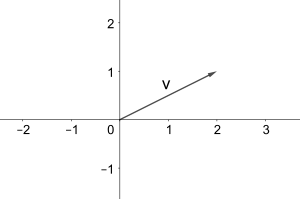

In $\mathbb{R}^2$ and $\mathbb{R}^3$ we know vectors as points in the plane or in space. Sometimes we also encounter arrows as representatives of vectors in the coordinate system. Vectors in $\mathbb{R}^2$ can be described by two coordinates, and vectors in $\mathbb{R}^3$ by three. The figure shows, for example, the arrow representation of a vector.

Often, however, three coordinates are not enough to represent all the desired information. This is shown by the following two examples:

Example (Radio probe)

Suppose we send off a radio probe (a balloon with a measuring device) in order to investigate the earth's atmosphere. Besides the position of the probe (three numbers), we record further measured data, namely temperature and air pressure. The three coordinates of $\mathbb{R}^3$ are already needed to represent the position of the probe. To represent the other measured values, we need two more coordinates. We assume that, seen from the measuring station, the probe is located 20 m to the east, 30 m to the north and at a height of 15 m. At this time, the instruments in the radio probe show a temperature of 13 °C and an air pressure of 1 bar. To write down all recorded data at once, we write the row vector $(20, 30, 15, 13, 1)^T$. Here, the superscript $T$ (transposed) allows the space-saving notation as a row vector. The notation as a column vector is

$$\begin{pmatrix} 20 \\ 30 \\ 15 \\ 13 \\ 1 \end{pmatrix}.$$

Thus we are in $\mathbb{R}^5$ instead of $\mathbb{R}^3$, because we need five instead of three numbers to describe the vector.

Example (Stocks)

We consider the stock values of 30 companies at a certain point in time. We can record them in a vector with 30 entries, where each entry stands for the value of the respective share at that point in time. We get a vector in $\mathbb{R}^{30}$ that describes the current state of the financial market. We can extend the 30 values further by including other stocks. Ultimately, we can choose any natural number $n$ as the dimension of our "stock vector". The current state of a stock market can thus be encoded by a vector of $\mathbb{R}^n$ with $n$ entries.

We have seen from the examples that it can be useful to extend $\mathbb{R}^3$ by further dimensions to a general vector space $\mathbb{R}^n$. And there are many more examples! In the transition from $\mathbb{R}^2$ to $\mathbb{R}^3$, we can still vividly imagine that we increase the dimension by adding an independent direction. In higher dimensions we lack this geometric intuition. However, we can picture higher-dimensional vector spaces very well in tuple notation: an additional dimension is obtained by adding another number. These numbers can all be chosen independently, and we call them coordinates.
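A minimal Python sketch of the tuple view, assuming we model a vector of $\mathbb{R}^n$ as a tuple of `float`s (floats only approximate real numbers): the radio probe's position is a 3-tuple, and recording two more measurements just appends two coordinates. The names `position`, `measurement` and `dimension` are our own, chosen only for illustration.

```python
# Position of the radio probe seen from the measuring station, in metres:
# (east, north, height). This is a vector of R^3.
position = (20.0, 30.0, 15.0)

# Two further independent measurements: temperature in °C and pressure in bar.
temperature = 13.0
pressure = 1.0

# Adding dimensions just means appending coordinates (tuple concatenation).
measurement = position + (temperature, pressure)


def dimension(v: tuple) -> int:
    """Return n for a vector of R^n, i.e. its number of coordinates."""
    return len(v)


print(measurement)             # (20.0, 30.0, 15.0, 13.0, 1.0)
print(dimension(position))     # 3
print(dimension(measurement))  # 5
```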

Generalization to $K^n$

So far we have created vector spaces by adding further dimensions to $\mathbb{R}^3$. Now we want to look at which properties of the real numbers are relevant for this and, based on this, generalize the notion of a vector space further. We are familiar with the arithmetic rules of $\mathbb{R}$. We already know vector addition and scalar multiplication in $\mathbb{R}^2$ and in $\mathbb{R}^3$, and we can visualize them vividly.

-

Figure: Addition of two vectors in $\mathbb{R}^2$

-

Figure: Scalar multiplication in $\mathbb{R}^2$

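In the same way, we can also calculate in higher dimensions. The sum of two vectors $v = (v_1, \dots, v_n)^T$ and $w = (w_1, \dots, w_n)^T$ of $\mathbb{R}^n$ is given by summing up the entries:

$$v + w = \begin{pmatrix} v_1 \\ \vdots \\ v_n \end{pmatrix} + \begin{pmatrix} w_1 \\ \vdots \\ w_n \end{pmatrix} = \begin{pmatrix} v_1 + w_1 \\ \vdots \\ v_n + w_n \end{pmatrix}$$

The scalar multiplication of $v$ by some $\lambda \in \mathbb{R}$ is done by multiplying all entries separately:

$$\lambda \cdot v = \lambda \cdot \begin{pmatrix} v_1 \\ \vdots \\ v_n \end{pmatrix} = \begin{pmatrix} \lambda v_1 \\ \vdots \\ \lambda v_n \end{pmatrix}$$

Just as in $\mathbb{R}^2$ and $\mathbb{R}^3$, we can thus proceed in $\mathbb{R}^n$ in general. Let us now consider which properties of $\mathbb{R}$ guarantee that we can compute with vectors in $\mathbb{R}^n$. The formulas above show that, in each component, vector addition corresponds to addition in $\mathbb{R}$ and scalar multiplication corresponds to multiplication in $\mathbb{R}$. For instance, in the first component of the sum we compute the addition $v_1 + w_1$ of real numbers. Likewise, for scalar multiplication we compute the product $\lambda v_3$ of real numbers in the third component.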
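The componentwise rules can be written down directly. The following Python sketch uses our own hypothetical helper names `add` and `scale`, with tuples of floats standing in for vectors of $\mathbb{R}^n$. Each entry of the result is computed using only one addition or one multiplication of numbers:

```python
from typing import Tuple

Vector = Tuple[float, ...]


def add(v: Vector, w: Vector) -> Vector:
    """Vector addition in R^n: add the entries component by component."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same number of entries")
    return tuple(v_i + w_i for v_i, w_i in zip(v, w))


def scale(lam: float, v: Vector) -> Vector:
    """Scalar multiplication in R^n: multiply every entry by lam."""
    return tuple(lam * v_i for v_i in v)


v = (1.0, 2.0, 3.0, 4.0, 5.0)
w = (10.0, 20.0, 30.0, 40.0, 50.0)
print(add(v, w))      # (11.0, 22.0, 33.0, 44.0, 55.0)
print(scale(2.0, v))  # (2.0, 4.0, 6.0, 8.0, 10.0)
```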

So the arithmetic in $\mathbb{R}^n$ is traced back to addition and multiplication in $\mathbb{R}$. This offers another possibility for abstraction. A set in which one can add and multiply as with the real numbers is called a field ($\mathbb{R}$ is such a field). So it should be sufficient for the entries of the vector tuples to come from a field. Thus we can form a vector space $K^n$ from every field $K$. In particular, this also works for other fields such as the rational numbers $\mathbb{Q}$ or the complex numbers $\mathbb{C}$. Analogously to $\mathbb{R}^n$, we start with the field $K$ and build up a vector space by adding further "independent directions".
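Since the componentwise rules only use addition and multiplication of the entries, the same sketch works unchanged for other fields. In Python, `fractions.Fraction` represents rational numbers exactly, and the built-in `complex` type represents complex numbers (in floating point). The helpers `add` and `scale` are again our own illustrative names; note that Python itself does not check that the entries form a field.

```python
from fractions import Fraction


def add(v, w):
    """Componentwise addition; only uses + of the entries."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same number of entries")
    return tuple(a + b for a, b in zip(v, w))


def scale(lam, v):
    """Componentwise scaling; only uses * of the entries."""
    return tuple(lam * a for a in v)


# Q^3: entries are exact rational numbers
p = (Fraction(1, 2), Fraction(2, 3), Fraction(-1))
q = (Fraction(1, 3), Fraction(1, 3), Fraction(5, 4))
print(add(p, q))  # (Fraction(5, 6), Fraction(1, 1), Fraction(1, 4))
print(scale(Fraction(3, 2), p))

# C^2: entries are complex numbers
z = (1 + 2j, 3j)
u = (2 - 1j, 1 + 0j)
print(add(z, u))  # ((3+1j), (1+3j))
print(scale(1j, z))
```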

Example (The vector space $\mathbb{Q}^n$)

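Like $\mathbb{R}^n$, the vector space $\mathbb{Q}^n$ is a set of tuples $(x_1, \dots, x_n)^T$, except that the entries are exclusively rational numbers from $\mathbb{Q}$ and not real numbers from $\mathbb{R}$. Hence we have $\mathbb{Q}^n = \{ (x_1, \dots, x_n)^T \mid x_1, \dots, x_n \in \mathbb{Q} \}$. Thus every tuple with only rational entries is a vector from $\mathbb{Q}^n$. In contrast, a tuple with a non-rational (also called irrational) number such as $\sqrt{2}$ in its second component is not a vector from $\mathbb{Q}^n$.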

Relation to polynomials

Above we used vectors of $\mathbb{R}^n$ in tuple notation to describe systems with $n$ pieces of information. We also find the structure of computing with tuples elsewhere. Consider a polynomial of degree 2 (a quadratic polynomial), given by $p(x) = a_2 x^2 + a_1 x + a_0$ with real coefficients $a_2 \neq 0$, $a_1$ and $a_0$. We always sort the summands such that the exponents are in descending order, from the degree $2$ of the polynomial down to $0$. In doing so, we note that this polynomial resembles the vector $(a_2, a_1, a_0)^T$. Here the first coefficient of the polynomial is in the first component of the vector, and so on. Basically, the vector encodes the polynomial.

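We can observe the same similarity between addition and scalar multiplication of polynomials on the one hand and the associated operations on vectors on the other. Let us take two polynomials $p$ and $q$ with $p(x) = a_2 x^2 + a_1 x + a_0$ and $q(x) = b_2 x^2 + b_1 x + b_0$, and a scalar $\lambda \in \mathbb{R}$. We can write the polynomials as tuples:

$$p \leftrightarrow \begin{pmatrix} a_2 \\ a_1 \\ a_0 \end{pmatrix}, \qquad q \leftrightarrow \begin{pmatrix} b_2 \\ b_1 \\ b_0 \end{pmatrix}$$

Now we calculate in both forms of representation:

$$(p + q)(x) = (a_2 + b_2) x^2 + (a_1 + b_1) x + (a_0 + b_0) \quad\leftrightarrow\quad \begin{pmatrix} a_2 \\ a_1 \\ a_0 \end{pmatrix} + \begin{pmatrix} b_2 \\ b_1 \\ b_0 \end{pmatrix} = \begin{pmatrix} a_2 + b_2 \\ a_1 + b_1 \\ a_0 + b_0 \end{pmatrix}$$

The multiplication of $p$ by the factor $\lambda$ also corresponds to the respective calculation in the associated vector tuples:

$$(\lambda p)(x) = \lambda a_2 x^2 + \lambda a_1 x + \lambda a_0 \quad\leftrightarrow\quad \lambda \cdot \begin{pmatrix} a_2 \\ a_1 \\ a_0 \end{pmatrix} = \begin{pmatrix} \lambda a_2 \\ \lambda a_1 \\ \lambda a_0 \end{pmatrix}$$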

Every polynomial of degree at most 2 can be uniquely represented by a three-dimensional vector in the way described. Conversely, every three-dimensional vector uniquely describes such a polynomial. (A vector whose first entry is $0$ describes a polynomial of degree less than 2, which is why we allow all degrees up to 2.) Thus we find a bijective map between the set of polynomials of degree at most 2 and $\mathbb{R}^3$. Similarly, there exists a bijective map between polynomials of degree at most 3 and $\mathbb{R}^4$, and in general between polynomials of degree at most $n$ and $\mathbb{R}^{n+1}$.
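The correspondence between polynomials and coefficient tuples can be checked numerically. In this Python sketch, a polynomial of degree at most 2 is stored as its coefficient tuple in descending order of exponents, and the hypothetical helper `evaluate` computes its value by Horner's scheme. The polynomials `p` and `q` are our own example values, not taken from the text. The loop confirms, at a few sample points, that adding or scaling the tuples yields the tuple of the sum or of the scaled polynomial:

```python
def evaluate(coeffs, x):
    """Evaluate the polynomial with coefficients (a_n, ..., a_1, a_0) at x (Horner's scheme)."""
    result = 0
    for a in coeffs:
        result = result * x + a
    return result


def add(v, w):
    """Componentwise addition of coefficient tuples of equal length."""
    return tuple(a + b for a, b in zip(v, w))


def scale(lam, v):
    """Multiply every coefficient by lam."""
    return tuple(lam * a for a in v)


p = (3, -2, 1)  # hypothetical example: p(x) = 3x^2 - 2x + 1
q = (1, 4, -5)  # hypothetical example: q(x) = x^2 + 4x - 5
lam = 2

# The tuple of p + q evaluates to p(x) + q(x); the tuple of lam*p to lam*p(x).
for x in range(-3, 4):
    assert evaluate(add(p, q), x) == evaluate(p, x) + evaluate(q, x)
    assert evaluate(scale(lam, p), x) == lam * evaluate(p, x)

print(add(p, q))      # (4, 2, -4)
print(scale(lam, p))  # (6, -4, 2)
```

Integer coefficients keep all checks exact; with floating-point coefficients, the equality tests could fail because of rounding.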

So far we have allowed all real numbers as coefficients of polynomials. We can also consider polynomials whose coefficients are elements of $\mathbb{Q}$. Accordingly, the entries of the corresponding vector are rational numbers. Polynomials of degree at most $n$ with rational coefficients thus correspond to vectors from the vector space $\mathbb{Q}^{n+1}$. In fact, instead of $\mathbb{R}$ or $\mathbb{Q}$, any field $K$ is allowed.

General vector spaces in mathematics

We have found that we can calculate with polynomials of degree at most $n$ in the same way as with vectors of $\mathbb{R}^{n+1}$. Thus, the set of polynomials of degree at most $n$ has a structure similar to that of $\mathbb{R}^{n+1}$. However, when we consider all polynomials, that is, polynomials of any degree, we reach the limits of the notion of $\mathbb{R}^n$. In this set, the polynomials can have arbitrarily large exponents:

$$\{\, a_m x^m + \dots + a_1 x + a_0 \mid m \ge 0,\ a_0, \dots, a_m \in \mathbb{R} \,\}$$

To describe this set by tuples, we would need infinitely many entries. The space of all polynomials has infinitely many dimensions, while in $\mathbb{R}^n$ we are limited to $n$ dimensions. Thus the set of all polynomials cannot be described by any $\mathbb{R}^n$. Nevertheless, as we have already seen, polynomials and tuples share a common structure. This allows a further step of abstraction: by capturing this common structure in a definition, we can talk about tuples as well as polynomials and about other sets with this structure.
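One way to see that no single $\mathbb{R}^n$ fits all polynomials is to drop the fixed length altogether. The sketch below (an illustrative choice of representation, not the only possible one) stores a polynomial as a Python `dict` mapping exponents to non-zero coefficients. Only finitely many coefficients are ever non-zero, but the exponents are unbounded, so the sum of two polynomials can have any degree. The helper names are our own.

```python
def add(p, q):
    """Sum of two polynomials given as {exponent: coefficient}."""
    result = dict(p)
    for k, a in q.items():
        result[k] = result.get(k, 0) + a
    # Drop coefficients that cancelled to zero.
    return {k: a for k, a in result.items() if a != 0}


def scale(lam, p):
    """Multiply every coefficient by lam, dropping zero coefficients."""
    return {k: lam * a for k, a in p.items() if lam * a != 0}


def degree(p):
    """Largest exponent; None for the zero polynomial (degree left undefined here)."""
    return max(p) if p else None


p = {2: 3, 0: 1}     # 3x^2 + 1
q = {100: 1, 2: -3}  # x^100 - 3x^2
s = add(p, q)
print(s)             # {0: 1, 100: 1}
print(degree(s))     # 100
print(scale(0, p))   # {}
```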

What is this common structure? The commonality of polynomials and of tuples is that they can be added and scaled and that both operations behave similarly on both sets. This is the common structure that vector spaces have: vectors are objects that can be added and scaled.

We have noted a structural difference between $\mathbb{R}^n$ and the vector space of all polynomials. However, they have in common that their elements can be added and scaled. Thus it seems natural to regard this property as the defining property of every vector space.

Up to now we have not considered which calculation rules apply to the addition and scalar multiplication of vectors in general vector spaces. In $\mathbb{R}$ we have the associative and commutative laws as well as the distributive law, and we know neutral and inverse elements with respect to addition and multiplication. As we have seen above, arithmetic in $\mathbb{R}^n$ can be traced back to arithmetic in $\mathbb{R}$. Accordingly, certain calculation rules of the real numbers carry over to the vector space $\mathbb{R}^n$, and analogously the rules of every field $K$ carry over to $K^n$.

Deriving the definition of a vector space

The addition, the scalar multiplication and all associated arithmetic laws provide the formal definition of a vector space. The starting point of our description of a vector space is a set $V$ containing all vectors of the vector space. So that our vector space contains at least one vector, we require $V$ to be non-empty. We have seen that the essential structure of a vector space is given by the arithmetic operations performed on it. So we need to describe addition and scalar multiplication on a vector space formally.

The additive structure of a vector space

We have already required that a vector space should be a non-empty set $V$. Now we use axioms to define which properties its additive structure must have. First, we note that the addition of vectors is an inner operation [1]. So it is a map $V \times V \to V$ that maps two vectors to another vector. The function value is the sum of the two input vectors.

We denote this map by the symbol $\oplus$. So $\oplus(v, w)$ is the sum of the two vectors $v$ and $w$. This notation is analogous to the notation $f(v, w)$ of a function, where instead of "$f$" we write the symbol "$\oplus$". Instead of $\oplus(v, w)$, the so-called infix notation $v \oplus w$ is usually used, and we will use it in the following.

We use the operation sign "$\oplus$" here to better distinguish vector addition from the addition of numbers "$+$", which we can initially consider independently. In most textbooks, the symbol "$+$" is also used for vector addition. Whether the addition of vectors or of numbers is meant must then be inferred from the context. For convenience, we will later also use the symbol "$+$" instead of "$\oplus$".
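Programming languages make the same distinction between a function applied to two arguments and infix notation. In this Python sketch, the hypothetical class `Vec` offers vector addition once as a method `oplus` (corresponding to the prefix form $\oplus(v, w)$) and once through Python's `__add__` hook, which is what lets us write the infix form `v + w`, just as textbooks write "$+$" for "$\oplus$":

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Vec:
    """A vector of R^n stored as a tuple of entries (illustrative class)."""
    entries: Tuple[float, ...]

    def oplus(self, other: "Vec") -> "Vec":
        """The map (v, w) -> v ⊕ w, called in function form as v.oplus(w)."""
        if len(self.entries) != len(other.entries):
            raise ValueError("vectors must have the same number of entries")
        return Vec(tuple(a + b for a, b in zip(self.entries, other.entries)))

    # Python evaluates the infix expression v + w by calling v.__add__(w).
    def __add__(self, other: "Vec") -> "Vec":
        return self.oplus(other)


v = Vec((1.0, 2.0))
w = Vec((3.0, -1.0))
print(v.oplus(w))  # Vec(entries=(4.0, 1.0))
print(v + w)       # Vec(entries=(4.0, 1.0))
```

Both calls run the same code; the infix form is only a more convenient way of writing it.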

To indicate that the set $V$ is equipped with an operation "$\oplus$", we write $(V, \oplus)$. However, in order for us to regard "$\oplus$" as an addition, this operation must satisfy certain characteristic properties that we already know from the addition of numbers. These are:

-

$V$ is closed under $\oplus$. That means the sum of two vectors again yields a well-defined vector: $v \oplus w \in V$ for all $v, w \in V$.

-

The vector addition is commutative ($\oplus$ satisfies the commutative law): $v \oplus w = w \oplus v$ for all $v, w \in V$.

-

The vector addition is associative ($\oplus$ satisfies the associative law): $(u \oplus v) \oplus w = u \oplus (v \oplus w)$ for all $u, v, w \in V$.

-

The vector addition has a neutral element. This means that there is at least one vector $0_V \in V$ for which $v \oplus 0_V = v$ for all $v \in V$.

Later we will show that it already follows from the other axioms that every vector space has exactly one neutral element. This neutral element is called the zero vector. For the zero vector of the vector space $V$ we write "$0_V$". If it is clear which vector space the zero vector comes from, we simply write "$0$".

-

For every vector $v \in V$ there exists at least one additive inverse element $\tilde{v} \in V$. For the vector $\tilde{v}$ inverse to $v$ we have:

$$v \oplus \tilde{v} = 0_V$$

This means that the addition of every vector and its (additive) inverse must yield the neutral element, in other words the zero vector. We will show later that the inverse vector is unique. So for every vector $v$ there is exactly one inverse vector. We call this vector the inverse or negative of $v$ and usually write "$-v$" for it.

A set with an operation satisfying the above five axioms is also called an abelian group [2].
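The axioms can be spot-checked on concrete vectors. The following Python sketch tests them for a few hypothetical sample vectors of $\mathbb{Q}^3$, using `fractions.Fraction` so that all arithmetic is exact (with floating-point numbers even associativity can fail because of rounding; for example, `(0.1 + 0.2) + 0.3 != 0.1 + (0.2 + 0.3)`). The helper names `add` and `neg` are our own. Passing such a test on finitely many samples is of course not a proof that the axioms hold:

```python
from fractions import Fraction
from itertools import product


def add(v, w):
    """Componentwise addition in Q^3."""
    return tuple(a + b for a, b in zip(v, w))


def neg(v):
    """Candidate additive inverse: negate every entry."""
    return tuple(-a for a in v)


ZERO = (Fraction(0),) * 3  # zero vector of Q^3

samples = [
    (Fraction(1, 2), Fraction(-3), Fraction(0)),
    (Fraction(2, 7), Fraction(5, 3), Fraction(-1, 4)),
    (Fraction(0), Fraction(1), Fraction(9, 2)),
]

for u, v, w in product(samples, repeat=3):
    s = add(u, v)
    assert len(s) == 3 and all(isinstance(a, Fraction) for a in s)  # closure
    assert add(u, v) == add(v, u)                                   # commutative law
    assert add(add(u, v), w) == add(u, add(v, w))                   # associative law
for v in samples:
    assert add(v, ZERO) == v                                        # neutral element
    assert add(v, neg(v)) == ZERO                                   # inverse element
print("all sampled axioms hold")
```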

The scalar multiplication

We have already defined which properties the addition of vectors must fulfil. What is still missing is the scalar multiplication of vectors. To distinguish scalar multiplication from the ordinary multiplication of numbers, we first use the symbol "$\odot$" for it. In textbooks, the symbol "$\cdot$" is used instead of "$\odot$", or the dot is even omitted completely; which operation is meant then follows from the context. We will use this notation later. The scalar multiplication maps a number (the scaling factor) and a vector to another vector.

The notation $\lambda \odot v$ means that $v$ is stretched (or compressed) by the factor $\lambda$. It is natural to take the scalar $\lambda$ from $\mathbb{R}$. However, we can generalize this as well. Any set in which one can add and multiply similarly to the real numbers can serve as the set of scaling factors. Such a set is called a field in mathematics.

The properties of scalar multiplication "$\odot$" are similar to those of the multiplication of numbers. We now want to define scalar multiplication formally by axioms. As with addition, a non-empty set $V$ is the starting point of the definition. In addition, we need a field $K$. The scalar multiplication is an outer operation $\odot\colon K \times V \to V$ satisfying the following properties:

-

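Scalar distributive law: $(\lambda + \mu) \odot v = (\lambda \odot v) \oplus (\mu \odot v)$ for all $\lambda, \mu \in K$ and $v \in V$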

-

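Vectorial distributive law: $\lambda \odot (v \oplus w) = (\lambda \odot v) \oplus (\lambda \odot w)$ for all $\lambda \in K$ and $v, w \in V$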

-

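Associative law for scalars: $(\lambda \cdot \mu) \odot v = \lambda \odot (\mu \odot v)$ for all $\lambda, \mu \in K$ and $v \in V$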

-

Let $1$ be the neutral element of multiplication in the field $K$. Then $1$ is also the neutral element of scalar multiplication: $1 \odot v = v$ for all $v \in V$

In order to scale vectors, we also need a field $K$ in the definition of a vector space. This field contains the scaling factors. Therefore, vector spaces are always defined over a field $K$. We say "$V$ is a vector space over $K$" or briefly "$V$ is a $K$-vector space" to express that the scaling factors for $V$ come from $K$.
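Analogously, the four axioms of scalar multiplication can be spot-checked for $K = \mathbb{Q}$ and $V = \mathbb{Q}^2$ with exact rational arithmetic. The helper names `add` and `smul` and the sample values are our own; again, this checks examples and proves nothing in general:

```python
from fractions import Fraction as F
from itertools import product


def add(v, w):
    """Vector addition in Q^2 (componentwise)."""
    return tuple(a + b for a, b in zip(v, w))


def smul(lam, v):
    """Scalar multiplication K x V -> V (componentwise)."""
    return tuple(lam * a for a in v)


scalars = [F(0), F(1), F(-2), F(3, 5)]
vectors = [(F(1), F(2)), (F(-1, 3), F(4, 7)), (F(0), F(5, 2))]

for lam, mu in product(scalars, repeat=2):
    for v in vectors:
        assert smul(lam + mu, v) == add(smul(lam, v), smul(mu, v))  # scalar distributive law
        assert smul(lam * mu, v) == smul(lam, smul(mu, v))          # associative law for scalars
for lam in scalars:
    for v, w in product(vectors, repeat=2):
        assert smul(lam, add(v, w)) == add(smul(lam, v), smul(lam, w))  # vectorial distributive law
for v in vectors:
    assert smul(F(1), v) == v  # 1 is neutral for scalar multiplication
print("all sampled axioms hold")
```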

Definition of a vector space

We can write down our considerations in a compressed way to get the formal definition of a vector space:

Definition (vector space)

Let $K$ be a field and let $V$ be a non-empty set with an inner operation $\oplus\colon V \times V \to V$ (vector addition) and an outer operation $\odot\colon K \times V \to V$ (scalar multiplication). The set $V$ with these two operations is called a vector space over the field $K$, or alternatively a $K$-vector space, if the following axioms hold:

-

$V$ together with the operation $\oplus$ forms an abelian group. That is, the following axioms are satisfied:

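- Associative law: For all $u, v, w \in V$ we have $(u \oplus v) \oplus w = u \oplus (v \oplus w)$.

- Commutative law: For all $v, w \in V$ we have $v \oplus w = w \oplus v$.

- Existence of a neutral element: There is an element $0_V \in V$ such that $v \oplus 0_V = v$ for all $v \in V$. This vector is called the neutral element of addition or zero vector.

- Existence of an inverse element: For every $v \in V$ there exists an element $\tilde{v} \in V$ such that $v \oplus \tilde{v} = 0_V$. The element $\tilde{v}$ is called the inverse element of $v$. Instead of $\tilde{v}$ we also write $-v$.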

-

In addition, the following axioms of scalar multiplication must be satisfied:

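- Scalar distributive law: For all $\lambda, \mu \in K$ and all $v \in V$ we have $(\lambda + \mu) \odot v = (\lambda \odot v) \oplus (\mu \odot v)$.

- Vector distributive law: For all $\lambda \in K$ and all $v, w \in V$ we have $\lambda \odot (v \oplus w) = (\lambda \odot v) \oplus (\lambda \odot w)$.

- Associative law for scalars: For all $\lambda, \mu \in K$ and all $v \in V$ we have $(\lambda \cdot \mu) \odot v = \lambda \odot (\mu \odot v)$.

- Neutral element of scalar multiplication: For all $v \in V$ and for $1 \in K$ (the neutral element of multiplication in $K$) we have $1 \odot v = v$. The $1$ is called the neutral element of scalar multiplication.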

Instead of "$V$" one often writes "$(V, \oplus, \odot)$". The latter notation makes clear that the set $V$ is equipped with the operations $\oplus$ and $\odot$.
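The definition applies to any set with suitable operations, not just to tuples. As a final sketch, the hypothetical function `check_axioms` below tests the vector space axioms on finite samples, assuming the scalars are rational numbers (`Fraction`). We apply it to polynomials of arbitrary degree with rational coefficients, stored as `dict`s from exponents to non-zero coefficients and viewed as a $\mathbb{Q}$-vector space; the zero polynomial is the empty `dict`. As before, this is a plausibility check on examples, not a proof:

```python
from fractions import Fraction as F
from itertools import product


def check_axioms(add, smul, zero, neg, scalars, vectors):
    """Spot-check the vector space axioms on finite samples (scalars assumed in Q)."""
    for u, v, w in product(vectors, repeat=3):
        assert add(add(u, v), w) == add(u, add(v, w))  # associative law
        assert add(u, v) == add(v, u)                  # commutative law
    for v in vectors:
        assert add(v, zero) == v                       # zero vector
        assert add(v, neg(v)) == zero                  # inverse element
        assert smul(F(1), v) == v                      # 1 ⊙ v = v
    for lam, mu in product(scalars, repeat=2):
        for v in vectors:
            assert smul(lam + mu, v) == add(smul(lam, v), smul(mu, v))
            assert smul(lam * mu, v) == smul(lam, smul(mu, v))
    for lam in scalars:
        for v, w in product(vectors, repeat=2):
            assert smul(lam, add(v, w)) == add(smul(lam, v), smul(lam, w))
    return True


# Polynomials with rational coefficients as {exponent: non-zero coefficient}.
def poly_add(p, q):
    r = dict(p)
    for k, a in q.items():
        r[k] = r.get(k, 0) + a
    return {k: a for k, a in r.items() if a != 0}


def poly_smul(lam, p):
    return {k: lam * a for k, a in p.items() if lam * a != 0}


def poly_neg(p):
    return poly_smul(F(-1), p)


polys = [{0: F(1), 2: F(3)}, {100: F(1, 2), 2: F(-3)}, {}]
print(check_axioms(poly_add, poly_smul, {}, poly_neg, [F(0), F(2), F(-1, 3)], polys))  # True
```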

Hint

We use the symbols "$\oplus$" and "$\odot$" to distinguish vector addition and scalar multiplication from the addition "$+$" and multiplication "$\cdot$" of numbers. In the literature this distinction is often not made, and the context makes clear whether, for example, "$+$" means an addition of numbers or of vectors.