Epsilon-delta definition of continuity – Serlo

Alongside the sequence criterion, the epsilon-delta criterion is another way to define the continuity of functions. It captures the characteristic property of continuous functions that sufficiently small changes of the argument cause arbitrarily small changes of the function value.

Motivation

At the beginning of this chapter, we learned that continuity of a function may, by a simple intuition, be considered as an absence of jumps. So at an argument $x_0$ where the function $f$ is continuous, the function values change arbitrarily little when we wiggle the argument by a sufficiently small amount. So $f(x) \approx f(x_0)$ for $x$ in the vicinity of $x_0$. The function values $f(x)$ may therefore be used to approximate $f(x_0)$.

Continuity when approximating function values

If a function has no jumps, we may approximate a function value $f(x_0)$ by other nearby values $f(x)$. For this approximation, and hence also for proofs of continuity, we will use the epsilon-delta criterion for continuity. So how will such an approximation look in a practical situation?

Suppose we conduct an experiment that includes measuring the air temperature as a function of time. Let $f$ be the function describing the temperature, so $f(x)$ is the temperature at time $x$. Now suppose there is a technical problem, so we have no data for $f(x_0)$, or we simply did not measure at exactly this point in time. However, we would like to approximate the function value $f(x_0)$ as precisely as we can:

Since the temperature changes continuously in time, and in particular there is no jump at $x_0$, we may instead use a temperature value measured at a time close to $x_0$. So let us approximate the value $f(x_0)$ by taking a temperature $f(x)$ with $x$ close to $x_0$. That means $f(x)$ is an approximation for $f(x_0)$. How close must $x$ come to $x_0$ in order to obtain a given approximation precision?

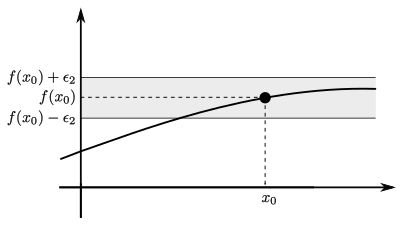

Suppose that for the later evaluation of the temperature, the maximal error shall be $\epsilon$. So considering the following figure, the measured temperature should be in the grey region. Those are all temperatures with function values between $f(x_0)-\epsilon$ and $f(x_0)+\epsilon$, i.e. inside the open interval $(f(x_0)-\epsilon, f(x_0)+\epsilon)$:

In this graphic, we may see that there is a region around $x_0$ where function values differ by less than $\epsilon$ from $f(x_0)$. So in fact, there is a time difference $\delta > 0$, such that all function values $f(x)$ with $|x - x_0| < \delta$ are inside the interval highlighted in grey:

Therefore, we may indeed approximate the missing data point $f(x_0)$ sufficiently well (meaning with a maximal error of $\epsilon$). This is done by taking a time $x$ differing from $x_0$ by less than $\delta$; then the error of $f(x)$ in approximating $f(x_0)$ will be smaller than the desired maximal error $\epsilon$. So $f(x)$ will be the approximation for $f(x_0)$.

Conclusion: There is a $\delta > 0$, such that the difference $|f(x) - f(x_0)|$ is smaller than $\epsilon$ for all $x$ with $|x - x_0|$ smaller than $\delta$. I.e. $|x - x_0| < \delta \implies |f(x) - f(x_0)| < \epsilon$.

Increasing approximation precision

What will happen if we need to know the temperature value to a higher precision due to increased requirements in the evaluation of the experiment? For instance, if the required maximal temperature error is set to a smaller $\epsilon_2$ instead of $\epsilon$?

In that case, there is an interval around $x_0$ in which the function values do not deviate by more than $\epsilon_2$ from $f(x_0)$. Mathematically speaking, there exists a $\delta_2 > 0$ such that $f(x)$ differs by less than $\epsilon_2$ from $f(x_0)$ if $|x - x_0| < \delta_2$:

No matter how small we choose $\epsilon$, thanks to the continuous temperature dependence, we may always find a $\delta > 0$, such that $f(x)$ differs by less than $\epsilon$ from $f(x_0)$ whenever $x$ is closer to $x_0$ than $\delta$. We keep in mind:

No matter which maximal error $\epsilon > 0$ is required, there is always an interval $(x_0 - \delta, x_0 + \delta)$ around $x_0$ with some $\delta > 0$, in which all approximating function values $f(x)$ deviate by less than $\epsilon$ from the function value $f(x_0)$ to be approximated.

This holds true since the function $f$ does not have a jump at $x_0$. In other words, since $f$ is continuous at $x_0$. Even beyond that, we may always infer from the above characteristic that there is no jump in the graph of $f$ at $x_0$. Therefore, we may use it as a formal definition for continuity. As mathematicians frequently use the variables $\epsilon$ and $\delta$ when describing this characteristic, it is also called the epsilon-delta criterion for continuity.

Epsilon-delta-criterion for continuity

Why does the epsilon-delta criterion hold if and only if the graph of the function does not have a jump at some argument (i.e. it is continuous there)? The temperature example allows us to intuitively verify that the epsilon-delta criterion is satisfied for continuous functions. But will the epsilon-delta criterion be violated when a function has a jump at some argument? To answer this question, let us assume that the temperature as a function of time has a jump at some $x_0$:

Let $\epsilon$ be a given maximal error that is smaller than the jump:

In that case, we cannot choose a $\delta$-interval $(x_0 - \delta, x_0 + \delta)$ around $x_0$ in which all function values deviate by less than $\epsilon$ from $f(x_0)$. If we, for instance, choose the following $\delta$, then there certainly is an $x$ between $x_0 - \delta$ and $x_0 + \delta$ with a function value $f(x)$ differing by more than $\epsilon$ from $f(x_0)$:

When choosing a smaller $\delta$, we will find an $x$ with $|f(x) - f(x_0)| > \epsilon$ as well:

No matter how small we choose $\delta$, there will always be an argument $x$ with a distance of less than $\delta$ to $x_0$, such that the function value $f(x)$ differs by more than $\epsilon$ from $f(x_0)$. So we have seen in an intuitive example that the epsilon-delta criterion is not satisfied if the function has a jump. Therefore, the epsilon-delta criterion characterizes whether the graph of the function has a jump at the considered argument or not. That means we may consider it as a definition of continuity. Since this criterion only uses mathematically well-defined terms, it may be used not just as an intuitive, but also as a formal definition.

Definition

Epsilon-Delta criterion for continuity

The $\epsilon$-$\delta$ definition of continuity at an argument $x_0$ inside the domain of definition is the following:

Definition (Epsilon-Delta-definition of continuity)

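A function $f: D \to \mathbb{R}$ with $D \subseteq \mathbb{R}$ is continuous at $x_0 \in D$ if and only if for any $\epsilon > 0$ there is a $\delta > 0$, such that $|f(x) - f(x_0)| < \epsilon$ holds for all $x \in D$ with $|x - x_0| < \delta$. Written in mathematical symbols, that means $f$ is continuous at $x_0 \in D$ if and only if

$$\forall \epsilon > 0\ \exists \delta > 0\ \forall x \in D: |x - x_0| < \delta \implies |f(x) - f(x_0)| < \epsilon$$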

Explanation of the quantifier notation:

The above definition describes continuity at a certain point (argument). An entire function is called continuous, when it is continuous - according to the epsilon-delta criterion - at each of its arguments in the domain of definition.

Derivation of the Epsilon-Delta criterion for discontinuity

We may also obtain a criterion for discontinuity by simply negating the above definition. Negating mathematical propositions has already been treated in the chapter "Negating propositions" („Aussagen negieren“). While doing so, a universal quantifier gets transformed into an existential quantifier and vice versa. Concerning the inner implication, we have to keep in mind that the negation of $A \implies B$ is equivalent to $A \land \neg B$. Negating the epsilon-delta criterion of continuity, we obtain:

This gets us the negation of continuity (i.e. discontinuity):

Epsilon-Delta criterion for discontinuity

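Definition (Epsilon-Delta definition of discontinuity)

A function $f: D \to \mathbb{R}$ with $D \subseteq \mathbb{R}$ is discontinuous at $x_0 \in D$ if and only if there is an $\epsilon > 0$, such that for all $\delta > 0$ an $x \in D$ with $|x - x_0| < \delta$ and $|f(x) - f(x_0)| \geq \epsilon$ exists. Mathematically written, $f$ is discontinuous at $x_0 \in D$ iff

$$\exists \epsilon > 0\ \forall \delta > 0\ \exists x \in D: |x - x_0| < \delta \land |f(x) - f(x_0)| \geq \epsilon$$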

Explanation of the quantifier notation:
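One possible term-by-term reading of the discontinuity criterion is sketched below. The wording of the annotations is an illustration modeled on the annotated continuity formula, not a quotation from the original page:

```latex
\[
\underbrace{\exists \epsilon > 0}_{\text{There is an } \epsilon > 0}
\ \underbrace{\forall \delta > 0}_{\text{such that for all } \delta > 0}
\ \underbrace{\exists x \in D}_{\text{there is an } x \in D}:
\ \underbrace{|x - x_0| < \delta}_{\text{with distance to } x_0 \text{ smaller than } \delta}
\ \underbrace{\land}_{\text{and}}
\ \underbrace{|f(x) - f(x_0)| \geq \epsilon}_{\text{distance of } f(x) \text{ to } f(x_0) \text{ at least } \epsilon}
\]
```

Compared with the continuity criterion, every quantifier is flipped and the implication has become a conjunction with the negated conclusion.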

Further explanations considering the Epsilon-Delta criterion

The inequality $|x - x_0| < \delta$ means that the distance between $x$ and $x_0$ is smaller than $\delta$. Analogously, $|f(x) - f(x_0)| < \epsilon$ tells us that the distance between $f(x)$ and $f(x_0)$ is smaller than $\epsilon$. Therefore, the implication $|x - x_0| < \delta \implies |f(x) - f(x_0)| < \epsilon$ just says that whenever $x$ and $x_0$ are closer together than $\delta$, the distance between $f(x)$ and $f(x_0)$ after applying the function is smaller than $\epsilon$. Thus we may interpret the epsilon-delta criterion in the following way:

No matter how small we set the maximal distance $\epsilon$ between function values, there will always be a $\delta > 0$, such that $f(x)$ and $f(x_0)$ (after being mapped) are closer together than $\epsilon$, whenever $x$ is closer to $x_0$ than $\delta$.

For continuous functions, we can control the error $|f(x) - f(x_0)|$ to be lower than $\epsilon$ by keeping the error in the argument $|x - x_0|$ sufficiently small (smaller than $\delta$). Finding a $\delta$ means answering the question: How low does my initial error in the argument have to be in order to get a final error smaller than $\epsilon$? This becomes relevant when doing numerical calculations or measurements. Imagine you are measuring some $x_0$ and then using it to compute $f(x_0)$, where $f$ is a continuous function. The epsilon-delta criterion allows you to find the maximal error in $x_0$ (i.e. $\delta$), which guarantees that the final error will be smaller than $\epsilon$.

A $\delta$ may only be found if small changes around the argument $x_0$ also cause small changes around the function value $f(x_0)$. Hence, for functions continuous at $x_0$, there has to be: $x \approx x_0 \implies f(x) \approx f(x_0)$

I.e.: whenever $x$ is sufficiently close to $x_0$, then $f(x)$ is approximately $f(x_0)$. This may also be described using the notion of an $\epsilon$-neighborhood:

For every $\epsilon$-neighborhood around $f(x_0)$, no matter how small it may be, there is always a $\delta$-neighborhood around $x_0$, whose function values are all mapped into the $\epsilon$-neighborhood.

In topology, this description using neighborhoods will be generalized to a topological definition of continuity.

Visualization of the Epsilon-Delta criterion

Description of continuity using the graph

The epsilon-delta criterion may nicely be visualized by taking a look at the graph of a function. Let's start by getting a picture of the implication $|x - x_0| < \delta \implies |f(x) - f(x_0)| < \epsilon$. This means the distance between $f(x)$ and $f(x_0)$ is smaller than $\epsilon$ whenever $x$ is closer to $x_0$ than $\delta$. So for $x \in (x_0 - \delta, x_0 + \delta)$, there is $f(x) \in (f(x_0) - \epsilon, f(x_0) + \epsilon)$. Hence, the point $(x, f(x))$ has to be inside the rectangle $(x_0 - \delta, x_0 + \delta) \times (f(x_0) - \epsilon, f(x_0) + \epsilon)$. This is a rectangle with width $2\delta$ and height $2\epsilon$ centered at $(x_0, f(x_0))$:

We will call this the $2\epsilon$-$2\delta$-rectangle and only consider its interior. That means the boundary does not belong to the rectangle. Following the epsilon-delta criterion, the implication $|x - x_0| < \delta \implies |f(x) - f(x_0)| < \epsilon$ has to be fulfilled for all arguments $x$. Thus, all points making up the graph of $f$ restricted to arguments inside the interval $(x_0 - \delta, x_0 + \delta)$ (in the interior of the $2\epsilon$-$2\delta$-rectangle, which is marked green) must never be above or below the rectangle (the red area):

So graphically, we may describe the epsilon-delta criterion as follows:

For all rectangle heights $2\epsilon$, there is a sufficiently small rectangle width $2\delta$, such that the graph of $f$ restricted to $(x_0 - \delta, x_0 + \delta)$ (i.e. the width of the rectangle) is entirely inside the green interior of the $2\epsilon$-$2\delta$-rectangle, and never in the red area above or below it.

Example of a continuous function

For an example, consider the function . This function is continuous everywhere - and hence also at the argument . There is . At first, consider a maximal final error of around . With , we can find a $\delta$, such that the graph of $f$ is entirely situated inside the interior of the $2\epsilon$-$2\delta$-rectangle:

But not only for this particular $\epsilon$, but for any $\epsilon > 0$ we may find a $\delta > 0$, such that the graph of $f$ is situated entirely inside the respective $2\epsilon$-$2\delta$-rectangle:

-

For , one can choose and the graph is in the interior of the --rectangle.

-

In case , the width will be small enough to get the graph into the --rectangle.

Example for a discontinuous function

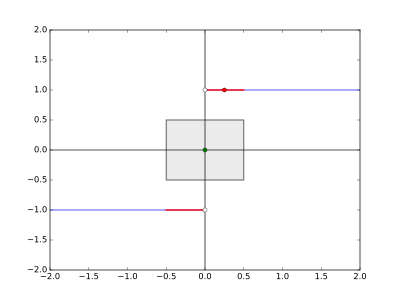

What happens if the function is discontinuous? Let's take the signum function $\operatorname{sgn}$, which is discontinuous at 0:

$$\operatorname{sgn}(x) = \begin{cases} 1 & x > 0 \\ 0 & x = 0 \\ -1 & x < 0 \end{cases}$$

And here is its graph:

The graph intuitively allows us to recognize that at $x_0 = 0$, there certainly is a discontinuity. And we may see this using the rectangle visualization as well. When choosing an $\epsilon$ smaller than the jump height (i.e. $\epsilon < 1$), then there is no $\delta > 0$, such that the graph can be fitted entirely inside the $2\epsilon$-$2\delta$-rectangle. For instance if $\epsilon = \tfrac{1}{2}$, then for any $\delta$, no matter how small, there will always be function values above or below the $2\epsilon$-$2\delta$-rectangle. In fact, this applies to all function values $\operatorname{sgn}(x)$ except for the one at $x = 0$:

-

For and , the signum function has values above or below the --rectangle (colored in red).

-

For we will find points in the graph above or below the --rectangle, as well.

Dependence of delta or epsilon choice

Continuity

How does the choice of $\delta$ depend on $\epsilon$ and $x_0$? Suppose an arbitrary $\epsilon > 0$ is given in order to check continuity of $f$ at $x_0$. Now, we need to find a rectangle width $2\delta$, such that the restriction of the graph of $f$ to arguments inside the interval $(x_0 - \delta, x_0 + \delta)$ entirely fits into the epsilon-tube $(f(x_0) - \epsilon, f(x_0) + \epsilon)$. This of course requires choosing $\delta$ sufficiently small. When $\delta$ is too large, there may be an argument $x$ in $(x_0 - \delta, x_0 + \delta)$, where $f(x)$ has escaped the tube, i.e. it has a distance to $f(x_0)$ larger than $\epsilon$:

- If for a given $\epsilon$, the respective $\delta$ is chosen too large, then there may be function values above or below the $2\epsilon$-$2\delta$-rectangle (marked red, here).
- By contrast, if $\delta$ is re-scaled to be sufficiently small, the graph entirely fits into the $2\epsilon$-$2\delta$-rectangle.

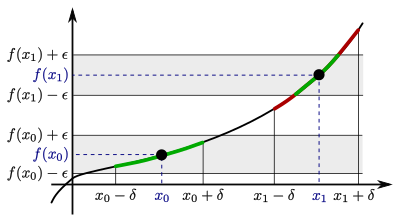

How small $\delta$ has to be chosen will depend on three factors: the function $f$, the given $\epsilon$ and the argument $x_0$. Depending on the slope of the function, a different $\delta$ has to be chosen (steep functions require a smaller $\delta$). Furthermore, for a smaller $\epsilon$ we also have to choose a smaller $\delta$. The following diagrams illustrate this: Here, a quadratic function is plotted, which is continuous at $x_0$. For a smaller $\epsilon$, we also need to choose a smaller $\delta$:

The choice of $\delta$ will depend on the argument $x_0$ as well. The more a function changes in the neighborhood of a certain point (i.e. it is steep around it), the smaller we have to choose $\delta$. The following graphic demonstrates this: The $\delta$-value proposed there is sufficiently small at $x_0$, but too large at $x_1$:

In the vicinity of $x_1$, the function has a higher slope compared to $x_0$. Hence, we need to choose a smaller $\delta$ at $x_1$. Let us denote the $\delta$-values at $x_0$ and $x_1$ correspondingly by $\delta_0$ and $\delta_1$, and choose $\delta_1$ to be smaller:

So, we have just seen that the choice of $\delta$ depends on the function $f$ to be considered, as well as the argument $x_0$ and the given $\epsilon$.

Discontinuity

For a discontinuity proof, the relations between the variables will interchange. This relates back to the interchange of the quantifiers under negation of propositions. In order to show discontinuity, we need to find an $\epsilon > 0$ small enough, such that for no $\delta > 0$ the graph of $f$ fits entirely into the $2\epsilon$-$2\delta$-rectangle. In particular, if the discontinuity is caused by a jump, then $\epsilon$ must be chosen smaller than the jump height. For $\epsilon$ too large, there might be a $\delta$, such that the graph does fit into the $2\epsilon$-$2\delta$-rectangle:

- Choosing $\epsilon$ too large for the signum function, we get a $\delta$, such that the graph entirely fits into the $2\epsilon$-$2\delta$-rectangle.
- If $\epsilon$ is chosen small enough, then for any $\delta > 0$ there will be function values above or below the $2\epsilon$-$2\delta$-rectangle.

Which $\epsilon$ has to be chosen again depends on the function around $x_0$. After $\epsilon$ has been chosen, an arbitrary $\delta > 0$ will be considered. Then, an $x$ between $x_0 - \delta$ and $x_0 + \delta$ has to be found, such that $f(x)$ has a distance larger than (or equal to) $\epsilon$ to $f(x_0)$. That means the point $(x, f(x))$ has to be situated above or below the $2\epsilon$-$2\delta$-rectangle. Which $x$ has to be chosen depends on a variety of parameters: the chosen $\epsilon$ and the arbitrarily given $\delta$, the discontinuity $x_0$ and the behavior of the function around it.

Example problems

Continuity

Exercise (Continuity of a linear function)

Prove that a linear function with is continuous.

How to get to the proof? (Continuity of a linear function)

To actually prove continuity of $f$, we need to check continuity at any argument $x_0 \in \mathbb{R}$. So let $x_0$ be an arbitrary real number. Now, choose any arbitrary maximal error $\epsilon > 0$. Our task is now to find a sufficiently small $\delta > 0$, such that $|f(x) - f(x_0)| < \epsilon$ for all arguments $x$ with $|x - x_0| < \delta$. Let us take a closer look at the inequality $|f(x) - f(x_0)| < \epsilon$:

That means, has to be fulfilled for all with . How to choose , such that implies ?

We use that the inequality contains the distance . As we know that this distance is smaller than . This can be plugged into the inequality :

If is now chosen such that , then will yield the inequality which we wanted to show. The smallness condition for can now simply be found by resolving for :

Any satisfying could be used for the proof. For instance, we may use . As we now found a suitable , we can finally conduct the proof:

Proof (Continuity of a linear function)

Let with and let be arbitrary. In addition, consider any to be given. We choose . Let with . There is:

This shows $|f(x) - f(x_0)| < \epsilon$ and establishes continuity of $f$ at $x_0$ by means of the epsilon-delta criterion. Since $x_0$ was chosen arbitrarily, we also know that the entire function $f$ is continuous.
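The same estimate works for any non-zero slope. As a sketch, assume a linear function $f(x) = mx + c$ where the constants $m \neq 0$ and $c$ are hypothetical names introduced here for illustration (they are not taken from the exercise). Then the choice $\delta = \frac{\epsilon}{|m|}$ gives, for all $x$ with $|x - x_0| < \delta$:

```latex
\begin{align*}
|f(x) - f(x_0)| &= |(m x + c) - (m x_0 + c)| \\
&= |m| \cdot |x - x_0| \\
&< |m| \cdot \delta = |m| \cdot \frac{\epsilon}{|m|} = \epsilon
\end{align*}
```

Note that $\delta$ here depends on $\epsilon$ and the slope only, not on $x_0$: a steeper line needs a smaller $\delta$ for the same $\epsilon$.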

Discontinuity

Exercise (Discontinuity of the signum function)

Prove that the signum function is discontinuous:

$$\operatorname{sgn}(x) = \begin{cases} 1 & x > 0 \\ 0 & x = 0 \\ -1 & x < 0 \end{cases}$$

How to get to the proof? (Discontinuity of the signum function)

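In order to prove discontinuity of the entire function, we just have to find one single argument where it is discontinuous. Considering the graph of $\operatorname{sgn}$, we can already guess which argument this may be:

The function has a jump at $x_0 = 0$. So we expect it to be discontinuous there. It remains to choose an $\epsilon > 0$ that makes it impossible to find a $\delta > 0$ for which the function fits into the $2\epsilon$-$2\delta$-rectangle. This is done by setting $\epsilon$ smaller than the jump height, for instance $\epsilon = \tfrac{1}{2}$. For that $\epsilon$, no matter how $\delta$ is given, there will be function values above or below the $2\epsilon$-$2\delta$-rectangle.

So let $\delta > 0$ be arbitrary. We need to show that there is an $x$ with $|x - x_0| < \delta$ but $|f(x) - f(x_0)| \geq \epsilon$. Let us take a look at the inequality $|x - x_0| < \delta$: with $x_0 = 0$ it reads $|x| < \delta$.

This inequality classifies all $x$ that can be used for the proof. The particular $x$ we choose has to fulfill $|f(x) - f(x_0)| \geq \epsilon$: with $\operatorname{sgn}(0) = 0$ and $\epsilon = \tfrac{1}{2}$ it reads $|\operatorname{sgn}(x)| \geq \tfrac{1}{2}$.

So our $x$ needs to fulfill both $|x| < \delta$ and $|\operatorname{sgn}(x)| \geq \tfrac{1}{2}$. The second inequality may be achieved quite easily: For any $x \neq 0$, the value $\operatorname{sgn}(x)$ is either $1$ or $-1$. So every $x \neq 0$ fulfills $|\operatorname{sgn}(x)| \geq \tfrac{1}{2}$.

Now we need to fulfill the first inequality $|x| < \delta$. From the second inequality, we have just concluded $x \neq 0$. This is particularly true for all $x$ with $0 < x < \delta$. Therefore, we choose $x$ to be somewhere between $0$ and $\delta$, for instance $x = \tfrac{\delta}{2}$.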

The following figure shows that this is a sensible choice. The --rectangle with and is drawn here. All points above or below that rectangle are marked red. These are exactly all inside the interval excluding . Our chosen (red dot) is situated directly in the middle of the red part of the graph above the rectangle:

So choosing $x = \tfrac{\delta}{2}$ is enough to complete the proof:

Proof (Discontinuity of the signum function)

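We set $x_0 = 0$ (this is where $\operatorname{sgn}$ is discontinuous). In addition, we choose $\epsilon = \tfrac{1}{2}$. Let $\delta > 0$ be arbitrary. For that given $\delta$, we choose $x = \tfrac{\delta}{2}$. Now, on one hand there is:

$$|x - x_0| = \left|\tfrac{\delta}{2} - 0\right| = \tfrac{\delta}{2} < \delta$$

But on the other hand:

$$|\operatorname{sgn}(x) - \operatorname{sgn}(x_0)| = \left|\operatorname{sgn}\left(\tfrac{\delta}{2}\right) - \operatorname{sgn}(0)\right| = |1 - 0| = 1 \geq \tfrac{1}{2} = \epsilon$$

So indeed, $\operatorname{sgn}$ is discontinuous at $x_0 = 0$. Hence, the function $\operatorname{sgn}$ is discontinuous itself.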

Relation to the sequence criterion

Now, we have two definitions of continuity: the epsilon-delta and the sequence criterion. In order to show that both definitions describe the same concept, we have to prove their equivalence. If the sequence criterion is fulfilled, it must imply that the epsilon-delta criterion holds and vice versa.

Epsilon-delta criterion implies sequence criterion

Theorem (The epsilon-delta criterion implies the sequence criterion)

Let $f: D \to \mathbb{R}$ with $D \subseteq \mathbb{R}$ be any function. If this function satisfies the epsilon-delta criterion at $x_0 \in D$, then the sequence criterion is fulfilled at $x_0$ as well.

How to get to the proof? (The epsilon-delta criterion implies the sequence criterion)

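Let us assume that the function $f: D \to \mathbb{R}$ satisfies the epsilon-delta criterion at $x_0 \in D$. That means:

For every $\epsilon > 0$, there is a $\delta > 0$ such that $|f(x) - f(x_0)| < \epsilon$ for all $x \in D$ with $|x - x_0| < \delta$.

We now want to prove that the sequence criterion is satisfied as well. So we have to show that for any sequence of arguments $(x_n)_{n \in \mathbb{N}}$ converging to $x_0$, there also has to be $\lim_{n \to \infty} f(x_n) = f(x_0)$. We therefore consider an arbitrary sequence of arguments $(x_n)_{n \in \mathbb{N}}$ in the domain with $\lim_{n \to \infty} x_n = x_0$. Our job is to show that the sequence of function values $(f(x_n))_{n \in \mathbb{N}}$ converges to $f(x_0)$. So by the definition of convergence:

For any $\epsilon > 0$ there has to be an $N \in \mathbb{N}$ such that $|f(x_n) - f(x_0)| < \epsilon$ for all $n \geq N$.

Let $\epsilon > 0$ be arbitrary. We have to find a suitable $N$ with $|f(x_n) - f(x_0)| < \epsilon$ for all sequence elements beyond that $N$, i.e. $n \geq N$. The inequality $|f(x_n) - f(x_0)| < \epsilon$ seems familiar, recalling the epsilon-delta criterion. The only difference is that the argument $x$ is replaced by a sequence element $x_n$, so we consider a special case for $x$. Let us apply the epsilon-delta criterion to that special case, with our arbitrarily chosen $\epsilon$ being given:

There is a $\delta > 0$, such that $|f(x_n) - f(x_0)| < \epsilon$ for all sequence elements $x_n$ fulfilling $|x_n - x_0| < \delta$.

Our goal is coming closer. Whenever a sequence element $x_n$ is close to $x_0$ with $|x_n - x_0| < \delta$, it will satisfy the inequality which we want to show, namely $|f(x_n) - f(x_0)| < \epsilon$. It remains to choose an $N$, where this is the case for all sequence elements beyond $N$. The convergence $\lim_{n \to \infty} x_n = x_0$ implies that $|x_n - x_0|$ gets arbitrarily small. So by the definition of convergence (with $\delta$ as the required maximal distance), we may find an $N$ with $|x_n - x_0| < \delta$ for all $n \geq N$. This $N$ is the index we are looking for. If there is $n \geq N$, it follows that $|x_n - x_0| < \delta$ and hence $|f(x_n) - f(x_0)| < \epsilon$ by the epsilon-delta criterion. In fact, any larger index will do the job, too. We now conclude our considerations and write down the proof: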

Proof (The epsilon-delta criterion implies the sequence criterion)

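Let $f: D \to \mathbb{R}$ be a function satisfying the epsilon-delta criterion at $x_0 \in D$. Let $(x_n)_{n \in \mathbb{N}}$ be a sequence inside the domain of definition, i.e. $x_n \in D$ for all $n \in \mathbb{N}$, converging as $\lim_{n \to \infty} x_n = x_0$. We would like to show that for any given $\epsilon > 0$ there exists an $N \in \mathbb{N}$, such that $|f(x_n) - f(x_0)| < \epsilon$ holds for all $n \geq N$.

So let $\epsilon > 0$ be given. Following the epsilon-delta criterion, there is a $\delta > 0$ with $|f(x) - f(x_0)| < \epsilon$ for all $x \in D$ close to $x_0$, i.e. $|x - x_0| < \delta$. As $(x_n)_{n \in \mathbb{N}}$ converges to $x_0$, we may find an $N \in \mathbb{N}$ with $|x_n - x_0| < \delta$ for all $n \geq N$.

Now, let $n \geq N$ be arbitrary. Hence, $|x_n - x_0| < \delta$. The epsilon-delta criterion now implies $|f(x_n) - f(x_0)| < \epsilon$. This proves $\lim_{n \to \infty} f(x_n) = f(x_0)$ and therefore establishes the sequence criterion.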

Sequence criterion implies epsilon-delta criterion

Theorem (The sequence criterion implies the epsilon-delta criterion)

Let $f: D \to \mathbb{R}$ with $D \subseteq \mathbb{R}$ be a function. If $f$ satisfies the sequence criterion at $x_0 \in D$, then the epsilon-delta criterion is fulfilled there as well.

How to get to the proof? (The sequence criterion implies the epsilon-delta criterion)

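We need to show that the following implication holds:

$$f \text{ satisfies the sequence criterion at } x_0 \implies f \text{ satisfies the epsilon-delta criterion at } x_0$$

This time, we do not show the implication directly, but using a contraposition. So we will prove the following implication (which is equivalent to the first one):

$$f \text{ does not satisfy the epsilon-delta criterion at } x_0 \implies f \text{ does not satisfy the sequence criterion at } x_0$$

Or in other words:

$$f \text{ violates the epsilon-delta criterion at } x_0 \implies f \text{ violates the sequence criterion at } x_0$$

So let $f: D \to \mathbb{R}$ be a function that violates the epsilon-delta criterion at $x_0 \in D$. Hence, $f$ fulfills the discontinuity version of the epsilon-delta criterion at $x_0$. We can find an $\epsilon > 0$, such that for any $\delta > 0$ there is an $x \in D$ with $|x - x_0| < \delta$ but $|f(x) - f(x_0)| \geq \epsilon$. It is our job now to prove that the sequence criterion is violated as well. This requires choosing a sequence of arguments $(x_n)_{n \in \mathbb{N}}$ converging to $x_0$ for which $(f(x_n))_{n \in \mathbb{N}}$ does not converge to $f(x_0)$.

This choice will be done exploiting the discontinuity version of the epsilon-delta criterion. That version provides us with an $\epsilon > 0$, where $|f(x) - f(x_0)| \geq \epsilon$ holds (so continuity is violated) for certain arguments $x$. We will now construct our sequence $(x_n)_{n \in \mathbb{N}}$ exclusively out of those certain $x$. This will automatically prevent $(f(x_n))_{n \in \mathbb{N}}$ from converging to $f(x_0)$.

So how to find a suitable sequence of arguments $(x_n)_{n \in \mathbb{N}}$ converging to $x_0$? The answer is: by choosing a null sequence $(\delta_n)_{n \in \mathbb{N}}$. Practically, this is done as follows: we set $\delta_n = \tfrac{1}{n}$. For any $\delta_n$, we take one of the certain $x$ for $\delta = \delta_n$ as our argument $x_n$. Then, $|x_n - x_0| < \delta_n$ but also $|f(x_n) - f(x_0)| \geq \epsilon$. These $x_n$ make up the desired sequence $(x_n)_{n \in \mathbb{N}}$. On one hand, there is $|x_n - x_0| < \tfrac{1}{n}$ and as $\tfrac{1}{n} \to 0$, the convergence $x_n \to x_0$ holds. But on the other hand $|f(x_n) - f(x_0)| \geq \epsilon$, so the sequence of function values $(f(x_n))_{n \in \mathbb{N}}$ does not converge to $f(x_0)$. Let us put these thoughts together in a single proof: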

Proof (The sequence criterion implies the epsilon-delta criterion)

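We establish the theorem by contraposition. It needs to be shown that a function $f$ violating the epsilon-delta criterion at $x_0$ also violates the sequence criterion at $x_0$. So let $f: D \to \mathbb{R}$ with $D \subseteq \mathbb{R}$ be a function violating the epsilon-delta criterion at $x_0 \in D$. Hence, there is an $\epsilon > 0$, such that for all $\delta > 0$ an $x \in D$ exists with $|x - x_0| < \delta$ but $|f(x) - f(x_0)| \geq \epsilon$.

So for any $n \in \mathbb{N}$, there is an $x_n \in D$ with $|x_n - x_0| < \tfrac{1}{n}$ but $|f(x_n) - f(x_0)| \geq \epsilon$. The inequality $|x_n - x_0| < \tfrac{1}{n}$ can also be written $x_0 - \tfrac{1}{n} < x_n < x_0 + \tfrac{1}{n}$. As $\tfrac{1}{n} \to 0$, there is both $x_0 - \tfrac{1}{n} \to x_0$ and $x_0 + \tfrac{1}{n} \to x_0$. Thus, by the sandwich theorem, the sequence $(x_n)_{n \in \mathbb{N}}$ converges to $x_0$.

But since $|f(x_n) - f(x_0)| \geq \epsilon$ for all $n \in \mathbb{N}$, the sequence $(f(x_n))_{n \in \mathbb{N}}$ cannot converge to $f(x_0)$. Therefore, the sequence criterion is violated at $x_0$ for the function $f$: We have found a sequence of arguments $(x_n)_{n \in \mathbb{N}}$ with $\lim_{n \to \infty} x_n = x_0$ for which $(f(x_n))_{n \in \mathbb{N}}$ does not converge to $f(x_0)$.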
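To see the construction at work, here is a sketch applying it to the signum function at $x_0 = 0$. The concrete choices $\epsilon = \tfrac{1}{2}$, $\delta_n = \tfrac{1}{n}$ and $x_n = \tfrac{\delta_n}{2}$ are assumptions made for this illustration:

```latex
\begin{align*}
\delta_n &= \frac{1}{n}, \qquad x_n = \frac{\delta_n}{2} = \frac{1}{2n} \\
|x_n - 0| &= \frac{1}{2n} < \frac{1}{n} = \delta_n
  \quad\Longrightarrow\quad \lim_{n \to \infty} x_n = 0 \\
|\operatorname{sgn}(x_n) - \operatorname{sgn}(0)| &= |1 - 0| = 1 \geq \frac{1}{2}
  \quad\Longrightarrow\quad \lim_{n \to \infty} \operatorname{sgn}(x_n) = 1 \neq 0 = \operatorname{sgn}(0)
\end{align*}
```

So the arguments converge to $0$ while the function values stay at distance $1$ from $\operatorname{sgn}(0)$, which is exactly a violation of the sequence criterion.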

Exercises

Quadratic function

Exercise (Continuity of the quadratic function)

Prove that the function $f: \mathbb{R} \to \mathbb{R}$ with $f(x) = x^2$ is continuous.

How to get to the proof? (Continuity of the quadratic function)

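For this proof, we need to show that the square function is continuous at any argument $x_0 \in \mathbb{R}$. Using the proof structure for the epsilon-delta criterion, we are given an arbitrary $\epsilon > 0$. Our job is to find a suitable $\delta > 0$, such that the inequality $|f(x) - f(x_0)| < \epsilon$ holds for all $x$ with $|x - x_0| < \delta$.

In order to find a suitable $\delta$, we plug in the definition of the function $f$ into the expression $|f(x) - f(x_0)|$ which shall be smaller than $\epsilon$:

$$|f(x) - f(x_0)| = |x^2 - x_0^2|$$

The expression $|x - x_0|$ may easily be controlled by $\delta$. Hence, it makes sense to construct an upper estimate for $|x^2 - x_0^2|$ which includes $|x - x_0|$ and a constant. The factor $|x - x_0|$ appears if we perform a factorization using the third binomial formula:

$$|x^2 - x_0^2| = |x + x_0| \cdot |x - x_0|$$

The requirement $|x - x_0| < \delta$ allows for an upper estimate of our expression:

$$|x + x_0| \cdot |x - x_0| \leq |x + x_0| \cdot \delta$$

The $\delta$ we are looking for may only depend on $\epsilon$ and $x_0$. So the dependence on $x$ in the factor $|x + x_0|$ is still a problem. We resolve it by making a further upper estimate for the factor $|x + x_0|$. We will use a simple, but widely applied "trick" for that: An $x_0$ is subtracted and then added again at another place (so we are effectively adding a 0), such that the expression $|x - x_0|$ appears:

$$|x + x_0| = |x - x_0 + 2 x_0| \leq |x - x_0| + 2|x_0|$$

The absolute value $|x - x_0|$ is obtained using the triangle inequality. This absolute value is again bounded from above by $\delta$:

$$|x - x_0| + 2|x_0| < \delta + 2|x_0|$$

So reshaping expressions and applying estimates, we obtain:

$$|x^2 - x_0^2| < (\delta + 2|x_0|) \cdot \delta$$

With this inequality in hand, we are almost done. If $\delta$ is chosen in a way that $(\delta + 2|x_0|) \cdot \delta \leq \epsilon$, we will get the final inequality $|x^2 - x_0^2| < \epsilon$. Such a $\delta$ can be found by solving the quadratic equation $\delta^2 + 2|x_0|\delta = \epsilon$ for $\delta$. Or even simpler, we may estimate $(\delta + 2|x_0|) \cdot \delta$ from above. We use that we may freely impose any condition on $\delta$. If we, for instance, set $\delta \leq 1$, then $\delta + 2|x_0| \leq 1 + 2|x_0|$, which simplifies things:

$$(\delta + 2|x_0|) \cdot \delta \leq (1 + 2|x_0|) \cdot \delta$$

So $(1 + 2|x_0|) \cdot \delta \leq \epsilon$ will also do the job. This inequality can be solved for $\delta$ to get the second condition on $\delta$ (the first one was $\delta \leq 1$):

$$\delta \leq \frac{\epsilon}{1 + 2|x_0|}$$

So any $\delta$ fulfilling both conditions does the job: $\delta \leq 1$ and $\delta \leq \frac{\epsilon}{1 + 2|x_0|}$ have to hold. And indeed, both are true for $\delta = \min\left\{1, \frac{\epsilon}{1 + 2|x_0|}\right\}$. This choice will be included into the final proof: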

Proof (Continuity of the quadratic function)

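Let $\epsilon > 0$ be arbitrary and $\delta = \min\left\{1, \frac{\epsilon}{1 + 2|x_0|}\right\}$. If an argument $x$ fulfills $|x - x_0| < \delta$ then:

$$|x^2 - x_0^2| = |x + x_0| \cdot |x - x_0| \leq (|x - x_0| + 2|x_0|) \cdot |x - x_0| < (\delta + 2|x_0|) \cdot \delta \leq (1 + 2|x_0|) \cdot \delta \leq \epsilon$$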

This shows that the square function is continuous by the epsilon-delta criterion.
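As a numeric illustration, take the hypothetical values $x_0 = 3$ and $\epsilon = 0.01$ (chosen here only as an example), assuming the square function $f(x) = x^2$ and the choice $\delta = \min\left\{1, \frac{\epsilon}{1 + 2|x_0|}\right\}$:

```latex
\begin{align*}
\delta &= \min\left\{1, \frac{0.01}{1 + 2 \cdot 3}\right\} = \frac{0.01}{7} \approx 0.00143 \\
|x^2 - 9| &= |x + 3| \cdot |x - 3| \\
&< (\delta + 6) \cdot \delta \qquad \text{for } |x - 3| < \delta \\
&\leq 7 \cdot \delta = 0.01
\end{align*}
```

So if $x_0 = 3$ is measured with an error below roughly $0.00143$, the squared value is off by less than $0.01$. For a larger $|x_0|$ the same $\epsilon$ would require a smaller $\delta$, since the parabola is steeper there.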

Concatenated absolute function

Exercise (Example for a proof of continuity)

Prove that the following function is continuous at :

How to get to the proof? (Example for a proof of continuity)

We need to show that for each given , there is a , such that for all with the inequality holds. In our case, . So by choosing for small enough, we may control the expression . First, let us plug into in order to simplify the inequality to be shown :

The objective is to "produce" as many expressions as possible, since we can control . It requires some experience with epsilon-delta proofs in order to "directly see" how this is achieved. First, we need to get rid of the double absolute. This is done using the inequality . For instance, we could use the following estimate:

However, this is a bad estimate as the expression no longer tends to 0 as . To resolve this problem, we use before applying the inequality :

A factor of can be directly extracted out of this with the third binomial formula:

And we can control it by :

Now, the required $\delta$ must only depend on $\epsilon$ and $x_0$. Therefore, we have to get rid of the $x$-dependence of . This can be done by finding an upper bound for which does not depend on $x$. As we are free to choose any $\delta$ for our proof, we may also impose any condition on it which helps us with the upper bound. In this case, turns out to be quite useful. In fact, or an even higher bound would do this job as well. What follows from this choice?

As before, there has to be . As , we now have and as , we obtain and . This is the upper bound we were looking for:

As we would like to show , we set . And get that our final inequality holds:

So if the two conditions for are satisfied, we get the final inequality. In fact, both conditions will be satisfied if , concluding the proof. So let's conclude our ideas and write them down in a proof:

Proof (Example for a proof of continuity)

Let be arbitrary and let . Further, let with . Then:

Step 1:

As , there is . Hence and . It follows that and therefore .

Step 2:

Hence, the function is continuous at .

Hyperbola

Exercise (Continuity of the hyperbolic function)

Prove that the function with is continuous.

How to get to the proof? (Continuity of the hyperbolic function)

The basic pattern for epsilon-delta proofs is applied here as well. We would like to show the implication $|x - x_0| < \delta \implies |f(x) - f(x_0)| < \epsilon$. First, let us plug in what we know and reshape our terms a bit until a $|x - x_0|$ appears:

By assumption, there will be , of which we can make use:

The choice of may again only depend on and so we need a smart estimate for in order to get rid of the -dependence. To do so, we consider .

Why was and not chosen? The explanation is quite simple: We need a $\delta$-neighborhood inside the domain of definition of $f$. If we had simply chosen , we might have been kicked out of this domain. For instance, in case , the following problem appears:

The biggest -value with is and the smallest one is . However, is not inside the domain of definition as . In particular, is element of that interval, where cannot be defined at all.

A smarter choice for , such that the -neighborhood doesn't touch the -axis is half of the distance to it, i.e. . A third of this distance or other fractions smaller than 1 would also be possible: , or .

As we chose and by , there is . This allows for an upper bound: and we may write:

So we get the estimate:

Now, we want to prove . Hence we choose . Plugging this in, our final inequality will be fulfilled.

So again, we imposed two conditions for : and . Both are fulfilled by , which we will use in the proof:

Proof (Continuity of the hyperbolic function)

Let with and let be arbitrary. Further, let be arbitrary. We choose . For all with there is:

Hence, the function is continuous at . And as was chosen to be arbitrary, the whole function is continuous.
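The chain of estimates can be sketched as follows, under the assumption that the hyperbola in question is $f(x) = \frac{1}{x}$ on $\mathbb{R} \setminus \{0\}$ and that $\delta = \min\left\{\frac{|x_0|}{2}, \frac{\epsilon |x_0|^2}{2}\right\}$ is chosen (both are assumptions for this illustration, matching the "half of the distance" idea described above):

```latex
\begin{align*}
|x - x_0| < \delta \leq \frac{|x_0|}{2}
  &\implies |x| \geq |x_0| - |x - x_0| > \frac{|x_0|}{2} \\[0.5em]
\left| \frac{1}{x} - \frac{1}{x_0} \right|
  &= \frac{|x_0 - x|}{|x| \cdot |x_0|} \\
  &< \frac{\delta}{\frac{|x_0|}{2} \cdot |x_0|} = \frac{2 \delta}{|x_0|^2} \\
  &\leq \frac{2}{|x_0|^2} \cdot \frac{\epsilon |x_0|^2}{2} = \epsilon
\end{align*}
```

The first line is what keeps the $\delta$-neighborhood away from $0$, so that $\frac{1}{|x|}$ stays bounded by $\frac{2}{|x_0|}$. The closer $x_0$ lies to $0$, the smaller $\delta$ has to be.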

Concatenated square root function

Exercise (Epsilon-Delta proof for the continuity of the Square Root Function)

Show, using the epsilon-delta criterion, that the following function is continuous:

How to get to the proof? (Epsilon-Delta proof for the continuity of the Square Root Function)

We need to show that for any given $\epsilon > 0$, there is a $\delta > 0$, such that all $x$ with $|x - x_0| < \delta$ satisfy the inequality $|f(x) - f(x_0)| < \epsilon$. So let us take a look at the target inequality and estimate the absolute value $|f(x) - f(x_0)|$ from above. We are able to control the term $|x - x_0|$. Therefore, we would like to get an upper bound for $|f(x) - f(x_0)|$ including the expression $|x - x_0|$. So we are looking for an inequality of the form

Here, is some expression depending on and . The second factor is smaller than and can be made arbitrarily small by a suitable choice of . Such a bound is constructed as follows:

Since , there is:

If we now choose $\delta$ small enough that the first factor times $\delta$ is at most $\epsilon$, then we obtain our target inequality $|f(x) - f(x_0)| < \epsilon$. But the first factor still depends on $x$, so $\delta$ would have to depend on $x$, too, and we require one choice of $\delta$ which is suitable for all $x$. Therefore, we need to get rid of the $x$-dependence. This is done by an estimate of the first factor, such that our inequality takes the form:

We even made independent of , which would in fact not have been necessary. So we obtain the following inequality

We need the estimate , in order to fulfill the target inequality . The choice of is sufficient for that. So let us write down the proof:

Proof (Epsilon-Delta proof for the continuity of the Square Root Function)

Let with . Let and an arbitrary be given. We choose . For all with there is:

Hence, is a continuous function.

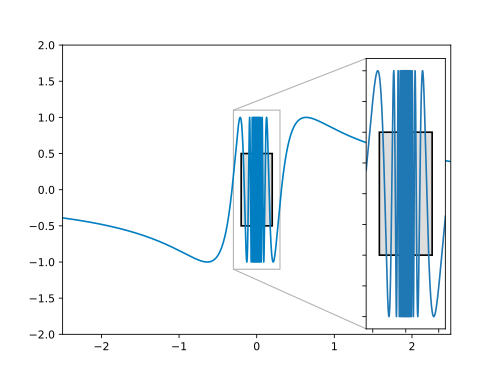

Discontinuity of the topological sine function

Exercise (Discontinuity of the topological sine function)

Prove the discontinuity at $x_0 = 0$ for the topological sine function:

How to get to the proof? (Discontinuity of the topological sine function)

In this exercise, discontinuity has to be shown for a given function. This is done by the negation of the epsilon-delta criterion. Our objective is to find both an $\epsilon > 0$ and an $x$, such that $|x - x_0| < \delta$ and $|f(x) - f(x_0)| \geq \epsilon$. Here, $x$ may be chosen depending on $\delta$, while $\epsilon$ has to be the same for all $\delta$. For a solution, we may proceed as follows:

Step 1: Simplify the target inequality

First, we may simplify the two inequalities which have to be fulfilled by plugging in $x_{0}=0$ and $f(x_{0})=0$. We therefore get: $|x|<\delta$ and $\left|\sin \left({\frac {1}{x}}\right)\right|\geq \epsilon$.

Step 2: Choose a suitable $\epsilon$

We consider the graph of the function $f$. It will help us find the building blocks for our proof:

We need to find an $\epsilon >0$ such that each arbitrarily narrow interval $(-\delta ,\delta )$ contains arguments whose function values have a distance of at least $\epsilon$ from $f(0)=0$. Visually, no matter how small the width of the $2\epsilon$-$2\delta$-rectangle is chosen, there will always be some points below or above it.

Taking a look at the graph, we see that our function oscillates between $-1$ and $1$. Hence, any $\epsilon \leq 1$ may be useful. In that case, there will be function values with $|f(x)|\geq \epsilon$ in every arbitrarily small neighborhood around $0$. We choose $\epsilon ={\frac {1}{2}}$. This is visualized in the following figure:

After $\epsilon$ has been chosen, an arbitrary $\delta >0$ will be assumed, for which we need to find a suitable $x$. This is what we will do next.

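Step 3: Choice of a suitable $x$

We just set $\epsilon ={\frac {1}{2}}$. Therefore, $|f(x)|\geq {\frac {1}{2}}$ has to hold. So it would be nice to choose an $x$ with $f(x)=1$. Now, $\sin(y)=1$ is obtained for any $y$ with $y={\frac {\pi }{2}}+2k\pi$, where $k\in \mathbb {Z}$. The condition for $x$ such that the function takes the value $1$ is therefore:

$$f(x)=1\iff \sin \left({\frac {1}{x}}\right)=1\iff {\frac {1}{x}}={\frac {\pi }{2}}+2k\pi \iff x={\frac {1}{{\frac {\pi }{2}}+2k\pi }}$$

So we found several $x$ where $f(x)=1$. Now we only need to select one among them which satisfies $|x|<\delta$ for the given $\delta$. Our $x={\frac {1}{{\frac {\pi }{2}}+2k\pi }}$ depend on $k$. So we have to select a suitable $k$, where $|x|<\delta$. To do so, let us plug $x={\frac {1}{{\frac {\pi }{2}}+2k\pi }}$ with a natural number $k$ into this inequality and solve it for $k$:

$$\begin{aligned}|x|<\delta &\iff {\frac {1}{{\frac {\pi }{2}}+2k\pi }}<\delta \\&\iff 2k\pi +{\frac {\pi }{2}}>{\frac {1}{\delta }}\\&\iff k>{\frac {1}{2\pi }}\left({\frac {1}{\delta }}-{\frac {\pi }{2}}\right)\end{aligned}$$

So the condition on $k$ is $k>{\frac {1}{2\pi }}\left({\frac {1}{\delta }}-{\frac {\pi }{2}}\right)$. If we choose just any natural number $k$ above this threshold, then $|x|<\delta$ will be fulfilled. Such a $k$ has to exist by Archimedes' axiom (for instance by rounding up the right-hand expression). So let us choose such a $k$ and define $x$ via $x={\frac {1}{{\frac {\pi }{2}}+2k\pi }}$. This gives us both $|x|<\delta$ and $|f(x)-f(0)|\geq \epsilon$. So we have all the building blocks together, which we will now assemble into a final proof: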

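Proof (Discontinuity of the topological sine function)

Choose $\epsilon ={\frac {1}{2}}$ and let $\delta >0$ be arbitrary. Choose a natural number $k$ with $k>{\frac {1}{2\pi }}\left({\frac {1}{\delta }}-{\frac {\pi }{2}}\right)$. Such a natural number has to exist by Archimedes' axiom. Further, let $x={\frac {1}{{\frac {\pi }{2}}+2k\pi }}$. Then:

$$k>{\frac {1}{2\pi }}\left({\frac {1}{\delta }}-{\frac {\pi }{2}}\right)\implies 2k\pi +{\frac {\pi }{2}}>{\frac {1}{\delta }}\implies {\frac {1}{{\frac {\pi }{2}}+2k\pi }}<\delta \implies |x|<\delta$$

In addition:

$$|f(x)-f(0)|=\left|\sin \left({\frac {1}{x}}\right)-0\right|=\left|\sin \left({\frac {\pi }{2}}+2k\pi \right)\right|=|1|\geq {\frac {1}{2}}=\epsilon$$

Hence, the function is discontinuous at $x_{0}=0$.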
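The construction of $k$ and $x$ from a given $\delta$ can be illustrated with a short numerical sketch. It assumes the topological sine function is $f(x)=\sin \left({\frac {1}{x}}\right)$ for $x\neq 0$ with $f(0)=0$, and $\epsilon ={\frac {1}{2}}$ as in the proof. The function name `witness` is hypothetical, and taking the smallest natural number $k\geq 1$ above the threshold is just one possible choice.

```python
import math
from typing import Tuple

EPSILON = 0.5  # the epsilon chosen in the proof


def f(x: float) -> float:
    # Assumed definition: sin(1/x) away from 0, and f(0) = 0.
    return math.sin(1.0 / x) if x != 0.0 else 0.0


def witness(delta: float) -> Tuple[int, float]:
    """Return (k, x) with |x| < delta and f(x) close to 1 (hypothetical helper)."""
    threshold = (1.0 / delta - math.pi / 2.0) / (2.0 * math.pi)
    # Smallest integer strictly above the threshold, but at least 1.
    k = max(1, math.floor(threshold) + 1)
    x = 1.0 / (math.pi / 2.0 + 2.0 * k * math.pi)
    return k, x


if __name__ == "__main__":
    for delta in [1.0, 0.1, 1e-3, 1e-6]:
        k, x = witness(delta)
        # Both conditions of the negated criterion should hold.
        print(delta, k, abs(x) < delta, abs(f(x) - f(0.0)) >= EPSILON)
```

Because of floating-point rounding, `f(x)` is only approximately $1$ here, which is still comfortably above $\epsilon ={\frac {1}{2}}$. The sketch checks a few values of $\delta$; the proof covers all $\delta >0$.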

![{\displaystyle {\begin{aligned}{\begin{array}{l}\underbrace {{\underset {}{}}\forall \epsilon >0} _{{\text{For all }}\epsilon >0}\underbrace {{\underset {}{}}\exists \delta >0} _{{\text{ there is a }}\delta >0}\underbrace {{\underset {}{}}\forall x\in D} _{{\text{, such that for all }}x\in D}\\[1em]\quad \underbrace {{\underset {}{}}|x-x_{0}|<\delta } _{{\text{ with distance to }}x_{0}{\text{ smaller than }}\delta }\underbrace {{\underset {}{}}\implies } _{\text{ there is}}\underbrace {{\underset {}{}}|f(x)-f(x_{0})|<\epsilon } _{{\text{, such that the distance of }}f(x){\text{ to }}f(x_{0}){\text{ is smaller than }}\epsilon }\end{array}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/aa3ced2047c2204f5a05bdc9ee30b44a217fd880)

![{\displaystyle {\begin{aligned}{\begin{array}{rrrrrcr}&\neg {\Big (}\forall \epsilon >0\,&\exists \delta >0\,&\forall x\in D:&|x-x_{0}|<\delta &\implies &|f(x)-f(x_{0})|<\epsilon {\Big )}\\[0.5em]\iff &\exists \epsilon >0\,&\neg {\Big (}\exists \delta >0\,&\forall x\in D:&|x-x_{0}|<\delta &\implies &|f(x)-f(x_{0})|<\epsilon {\Big )}\\[0.5em]\iff &\exists \epsilon >0\,&\forall \delta >0\,&\neg {\Big (}\forall x\in D:&|x-x_{0}|<\delta &\implies &|f(x)-f(x_{0})|<\epsilon {\Big )}\\[0.5em]\iff &\exists \epsilon >0\,&\forall \delta >0\,&\exists x\in D:&\neg {\Big (}|x-x_{0}|<\delta &\implies &|f(x)-f(x_{0})|<\epsilon {\Big )}\\[0.5em]\iff &\exists \epsilon >0\,&\forall \delta >0\,&\exists x\in D:&|x-x_{0}|<\delta &\land &\neg {\Big (}|f(x)-f(x_{0})|<\epsilon {\Big )}\\[0.5em]\iff &\exists \epsilon >0\,&\forall \delta >0\,&\exists x\in D:&|x-x_{0}|<\delta &\land &|f(x)-f(x_{0})|\geq \epsilon \end{array}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a4575e200c1888fae248ce7a97ec488df4013fd9)

![{\displaystyle {\begin{aligned}{\begin{array}{l}\underbrace {{\underset {}{}}\exists \epsilon >0} _{{\text{There is a }}\epsilon >0,}\underbrace {{\underset {}{}}\forall \delta >0} _{{\text{ such that for all }}\delta >0}\underbrace {{\underset {}{}}\exists x\in D} _{{\text{ there is an }}x\in D}\\[1em]\quad \underbrace {{\underset {}{}}|x-x_{0}|<\delta } _{{\text{ with distance to }}x_{0}{\text{ smaller than }}\delta }\underbrace {{\underset {}{}}\land } _{\text{ and}}\underbrace {{\underset {}{}}|f(x)-f(x_{0})|\geq \epsilon } _{{\text{the distance of }}f(x){\text{ to }}f(x_{0}){\text{ is bigger (or equal) }}\epsilon }\end{array}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/51116b7da7810e0d171c243b10d962e932d8757f)

![{\displaystyle {\begin{aligned}{\begin{array}{rrl}&|f(x)-f(x_{0})|&<\epsilon \\[0.5em]\iff &\left|{\frac {1}{3}}x-{\frac {1}{3}}x_{0}\right|&<\epsilon \\[0.5em]\iff &\left|{\frac {1}{3}}(x-x_{0})\right|&<\epsilon \\[0.5em]\iff &{\frac {1}{3}}|x-x_{0}|&<\epsilon \end{array}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5d31310b5d997a542e2d16535a112538e5d5bd35)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\left|{\frac {1}{3}}x-{\frac {1}{3}}x_{0}\right|\\[0.5em]&=\left|{\frac {1}{3}}(x-x_{0})\right|\\[0.5em]&={\frac {1}{3}}|x-x_{0}|\\[0.5em]&\quad {\color {Gray}\left\downarrow \ |x-x_{0}|<\delta \right.}\\[0.5em]&<{\frac {1}{3}}\delta \\[0.5em]&\quad {\color {Gray}\left\downarrow \ \delta =3\epsilon \right.}\\[0.5em]&\leq {\frac {1}{3}}(3\epsilon )=\epsilon \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/4cc60cea8cdcb2598454593c6b521c7516e7f89c)

![{\displaystyle {\begin{aligned}{\begin{array}{rrl}&|f(x)-f(x_{0})|&\geq \epsilon \\[0.5em]\iff &|\operatorname {sgn}(x)-\operatorname {sgn}(x_{0})|&\geq {\frac {1}{2}}\\[0.5em]\iff &|\operatorname {sgn}(x)-\operatorname {sgn}(0)|&\geq {\frac {1}{2}}\\[0.5em]\iff &|\operatorname {sgn}(x)|&\geq {\frac {1}{2}}\end{array}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/bcc44b40d90ac63c780e7d0dc1f8f767d68457c2)

![{\displaystyle {\begin{aligned}{\begin{array}{rrl}&{\frac {1}{2}}&<1\\[0.5em]\implies &{\frac {1}{2}}\delta &<\delta \\[0.5em]\implies &\left|{\frac {1}{2}}\delta \right|&<\delta \\[0.5em]\implies &\left|{\frac {1}{2}}\delta -0\right|&<\delta \\[0.5em]\implies &|x-x_{0}|&<\delta \end{array}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/57e7f87669f9fd77335e50cfd861641e94a2002b)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=|\operatorname {sgn}(x)-\operatorname {sgn}(x_{0})|\\[0.5em]&=\left|\operatorname {sgn} \left({\frac {\delta }{2}}\right)-\operatorname {sgn}(0)\right|\\[0.5em]&=\left|1-0\right|\\[0.5em]&=1\\[0.5em]&\geq {\frac {1}{2}}=\epsilon \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/e3167c8c11ff695bed71173f7068a3da353af4e5)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=|x^{2}-x_{0}^{2}|\\[0.5em]&=|x+x_{0}||x-x_{0}|\\[0.5em]&\quad {\color {OliveGreen}\left\downarrow \ |x-x_{0}|<\delta \right.}\\[0.5em]&<|x+x_{0}|\cdot \delta \\[0.5em]&=|x\underbrace {-x_{0}+x_{0}} _{=\ 0}+x_{0}|\cdot \delta \\[0.5em]&=|x-x_{0}+2x_{0}|\cdot \delta \\[0.5em]&\leq (|x-x_{0}|+2|x_{0}|)\cdot \delta \\[0.5em]&\quad {\color {OliveGreen}\left\downarrow \ |x-x_{0}|<\delta \right.}\\[0.5em]&<(\delta +2|x_{0}|)\cdot \delta \\[0.5em]&\quad {\color {OliveGreen}\left\downarrow \ \delta \leq 1\right.}\\[0.5em]&\leq (1+2|x_{0}|)\cdot \delta \\[0.5em]&\quad {\color {OliveGreen}\left\downarrow \ \delta ={\frac {\epsilon }{1+2|x_{0}|}}\right.}\\[0.5em]&=(1+2|x_{0}|)\cdot {\frac {\epsilon }{1+2|x_{0}|}}\\[0.5em]&=\epsilon \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ebb3bb75ca3552607bc833f51fcbb75f3d72b660)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=|f(x)-f(1)|\\[0.5em]&=|5\cdot |x^{2}-2|+3-(5|1^{2}-2|+3)|\\[0.5em]&=|5\cdot |x^{2}-2|-5|\\[0.5em]&=5\cdot ||x^{2}-2|-1|\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/69d266482610f10dbbb503cde4f016731c087395)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\ldots \\[0.3em]&=5\cdot ||x^{2}-2|-1|\\[0.3em]&=5\cdot ||x^{2}-2|-|1||\\[0.3em]&\ {\color {Gray}\left\downarrow \ ||a|-|b||\leq |a-b|\right.}\\[0.3em]&\leq 5\cdot |x^{2}-2-1|\\[0.3em]&=5\cdot |x^{2}-3|\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ad49ec4121be7ebbb9c70668503e553b2e8f40a8)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\ldots \\[0.3em]&=5\cdot |x^{2}-2|-1|\\[0.3em]&=5\cdot |x^{2}-2|-|-1||\\[0.3em]&\quad {\color {Gray}\left\downarrow \ |a-b|\geq ||a|-|b||\right.}\\[0.3em]&\leq 5\cdot |x^{2}-2-(-1)|\\[0.3em]&=5\cdot |x^{2}-1|\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0b95d37135edaa1cb43baadd154883fd2cbbaecf)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\ldots \\[0.5em]&\leq 5\cdot |x^{2}-1|\\[0.5em]&=5\cdot |x+1||x-1|\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/354cef939486c44d804e4332f3c9b82b2167b60c)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\ldots \\[0.5em]&\leq 5\cdot |x^{2}-1|\\[0.5em]&=5\cdot |x+1||x-1|\\[0.5em]&<5\cdot |x+1|\cdot \delta \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ae97a41cdc436eb91a8d1f524c1c5b05f7aaa7bf)

![{\displaystyle x\in [1-1;1+1]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/efd6f0b16fff92863add316a12def2b473ce7ef6)

![{\displaystyle 1+x\in [1;3]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0e1e41ddeb905578af9d8e17d3bd454394f356a3)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\ldots \\[0.5em]&<5\cdot |x+1|\cdot \delta \\[0.5em]&\quad {\color {Gray}\left\downarrow \ |x+1|\leq 3\right.}\\[0.5em]&\leq 15\cdot \delta \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/da02b2abe01990a7c8869caea17519cab0ed54cc)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\ldots \\[0.5em]&<15\cdot \delta \\[0.5em]&\leq 15\cdot {\frac {\epsilon }{15}}\\[0.5em]&\leq \epsilon .\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/84d0b6afce1242e6ac5d9dc35dc5c1b094bd2f52)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=|f(x)-f(1)|\\[0.5em]&=|5\cdot |x^{2}-2|+3-(5|1^{2}-2|+3)|\\[0.5em]&=|5\cdot |x^{2}-2|-5|\\[0.5em]&=5\cdot ||x^{2}-2|-1|\\[0.5em]&\quad {\color {Gray}\left\downarrow \ 1\iff |-1|\right.}\\[0.5em]&=5\cdot ||x^{2}-2|-|-1||\\[0.5em]&\quad {\color {Gray}\left\downarrow \ |a-b|\geq ||a|-|b||\right.}\\[0.5em]&\leq 5\cdot |x^{2}-2-(-1)|\\[0.5em]&=5\cdot |x^{2}-1|\\[0.5em]&\quad {\color {Gray}\left\downarrow \ a^{2}-b^{2}=(a+b)(a-b)\right.}\\[0.5em]&=5\cdot (|x+1|\cdot \underbrace {|x-1|} _{<\delta })\\[0.5em]&<5\cdot |x+1|\cdot \delta \\[0.5em]&\quad {\color {Gray}\left\downarrow \ |x+1|\leq 3\right.}\\[0.5em]&\leq 15\delta \\[0.5em]&\quad {\color {Gray}\left\downarrow \ \delta ={\frac {\epsilon }{15}}\right.}\\[0.5em]&=15\cdot {\frac {\epsilon }{15}}\\[0.5em]&\leq \epsilon \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/0769fa7ba63b17f3e4d3833d6f553429e1eb3372)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\left|{\frac {1}{x}}-{\frac {1}{x_{0}}}\right|\\[0.5em]&=\left|{\frac {x-x_{0}}{x\cdot x_{0}}}\right|\\[0.5em]&={\frac {|x-x_{0}|}{|x||x_{0}|}}\\[0.5em]&={\frac {1}{|x||x_{0}|}}\cdot |x-x_{0}|\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/01ba499ec4117d2c9c4aaecaf73508b9e7e25786)

![{\displaystyle x\in \left[{\tfrac {x_{0}}{2}};{\tfrac {3x_{0}}{2}}\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/d47a3aef04163c30d6cf6b0d2e661e3d0ca39a79)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\ldots <{\frac {1}{|x||x_{0}|}}\cdot \delta \\[0.5em]&\quad {\color {Gray}\left\downarrow \ \delta ={\frac {\epsilon \cdot |x_{0}|^{2}}{2}}\right.}\\[0.5em]&\leq {\frac {2}{|x_{0}|^{2}}}\cdot \delta \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/97179ce859e8df4cffeebc20e36e4fff137f8bbf)

![{\displaystyle {\begin{aligned}|f(x)-f(x_{0})|&=\left|{\frac {1}{x}}-{\frac {1}{x_{0}}}\right|\\[0.5em]&=\left|{\frac {x-x_{0}}{x\cdot x_{0}}}\right|\\[0.5em]&={\frac {|x-x_{0}|}{|x||x_{0}|}}\\[0.5em]&={\frac {1}{|x||x_{0}|}}|x-x_{0}|\\[0.5em]&\quad {\color {Gray}\left\downarrow \ {\frac {1}{|x|}}\leq {\frac {2}{|x_{0}|}}\right.}\\[0.5em]&\leq {\frac {2}{|x_{0}|^{2}}}|x-x_{0}|\\[0.5em]&\quad {\color {Gray}\left\downarrow \ |x-x_{0}|<\delta \right.}\\[0.5em]&<{\frac {2\delta }{|x_{0}|^{2}}}\\[0.5em]&\quad {\color {Gray}\left\downarrow \ \delta ={\frac {\epsilon \cdot |x_{0}|^{2}}{2}}\right.}\\[0.5em]&={\frac {2}{|x_{0}|^{2}}}\cdot {\frac {\epsilon \cdot |x_{0}|^{2}}{2}}\\[0.5em]&=\epsilon \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ff8bd751a75cee3af77911fbab6f8d4abb0b9058)

![{\displaystyle {\begin{aligned}|f(x)-f(a)|&=\left|{\sqrt {5+x^{2}}}-{\sqrt {5+a^{2}}}\right|\\[0.3em]&\quad {\color {Gray}\left\downarrow \ {\text{expand with}}\left|{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}\right|\right.}\\[0.3em]&={\frac {\left|{\sqrt {5+x^{2}}}-{\sqrt {5+a^{2}}}\right|\cdot \left|{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}\right|}{\left|{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}\right|}}\\[0.3em]&\quad {\color {Gray}\left\downarrow \ {\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}\geq 0\right.}\\[0.3em]&={\frac {\left|x^{2}-a^{2}\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}\\[0.3em]&={\frac {\left|x+a\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}\cdot |x-a|\\[0.3em]&\quad {\color {Gray}\left\downarrow \ K(x,a):={\frac {\left|x+a\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}\right.}\\[0.3em]&=K(x,a)\cdot |x-a|\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7fd151e0f901ce664e4e622e2ead380c1b77aa08)

![{\displaystyle {\begin{aligned}K(x,a)&={\frac {\left|x+a\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}\\[0.3em]&\quad {\color {Gray}\left\downarrow \ {\text{triangle inequality}}\right.}\\[0.3em]&\leq {\frac {\left|x\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}+{\frac {\left|a\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}\\[0.3em]&\quad {\color {Gray}\left\downarrow \ {\frac {a}{b+c}}\leq {\frac {a}{b}}{\text{ for }}a,c\geq 0,b>0\right.}\\[0.3em]&\leq {\frac {\left|x\right|}{\sqrt {5+x^{2}}}}+{\frac {\left|a\right|}{\sqrt {5+a^{2}}}}\\[0.3em]&\quad {\color {Gray}\left\downarrow \ {\frac {|a|}{\sqrt {5+a^{2}}}}\leq 1{\text{, since }}{\sqrt {5+a^{2}}}\geq {\sqrt {a^{2}}}=|a|\right.}\\[0.3em]&\leq 2=:{\tilde {K}}(a)\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/fb1a15225e7caed47a4e11e56b9343fcbf2c6960)

![{\displaystyle {\begin{aligned}|f(x)-f(a)|&=\left|{\sqrt {5+x^{2}}}-{\sqrt {5+a^{2}}}\right|\\[0.3em]&={\frac {\left|{\sqrt {5+x^{2}}}-{\sqrt {5+a^{2}}}\right|\cdot \left|{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}\right|}{\left|{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}\right|}}\\[0.3em]&={\frac {\left|x^{2}-a^{2}\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}\\[0.3em]&={\frac {\left|x+a\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}\cdot |x-a|\\[0.3em]&\leq \left({\frac {\left|x\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}+{\frac {\left|a\right|}{{\sqrt {5+x^{2}}}+{\sqrt {5+a^{2}}}}}\right)\cdot |x-a|\\[0.3em]&\leq \left({\frac {\left|x\right|}{\sqrt {5+x^{2}}}}+{\frac {\left|a\right|}{\sqrt {5+a^{2}}}}\right)\cdot |x-a|\\[0.3em]&\leq (1+1)\cdot |x-a|\\[0.3em]&\leq 2\cdot |x-a|\\[0.3em]&\quad {\color {Gray}\left\downarrow \ |x-a|<\delta ={\frac {\epsilon }{2}}\right.}\\[0.3em]&<2\cdot {\frac {\epsilon }{2}}=\epsilon \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/12cd8aa90f8a0c4e92c8ba9955b4a65297f2b797)

![{\displaystyle {\begin{aligned}\left|x\right|<\delta &\iff \left|{\frac {1}{{\frac {\pi }{2}}+2k\pi }}\right|<\delta \\[0.5em]&\iff {\frac {1}{{\frac {\pi }{2}}+2k\pi }}<\delta \\[0.5em]&\iff 2k\pi +{\frac {\pi }{2}}>{\frac {1}{\delta }}\\[0.5em]&\iff 2k\pi >{\frac {1}{\delta }}-{\frac {\pi }{2}}\\[0.5em]&\iff k>{\frac {1}{2\pi }}\left({\frac {1}{\delta }}-{\frac {\pi }{2}}\right)\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/25af20f89560c07b3820129dd8d10ce2b902d7e9)

![{\displaystyle {\begin{aligned}k>{\frac {1}{2\pi }}\left({\frac {1}{\delta }}-{\frac {\pi }{2}}\right)&\implies 2k\pi >{\frac {1}{\delta }}-{\frac {\pi }{2}}\\[0.5em]&\implies 2k\pi +{\frac {\pi }{2}}>{\frac {1}{\delta }}\\[0.5em]&\quad {\color {OliveGreen}\left\downarrow \ {\frac {1}{a}}>{\frac {1}{b}}>0\iff 0<a<b\right.}\\[0.5em]&\implies {\frac {1}{{\frac {\pi }{2}}+2k\pi }}<\delta \\[0.5em]&\quad {\color {OliveGreen}\left\downarrow \ {\frac {1}{{\frac {\pi }{2}}+2k\pi }}>0\right.}\\[0.5em]&\implies \left|{\frac {1}{{\frac {\pi }{2}}+2k\pi }}\right|<\delta \\[0.5em]&\quad {\color {OliveGreen}\left\downarrow \ x={\frac {1}{{\frac {\pi }{2}}+2k\pi }}\right.}\\[0.5em]&\implies \left|x\right|<\delta \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ad7985a4bd1699ae43466813ef6cb9d56079acd3)

![{\displaystyle {\begin{aligned}\left|f(x)-f(0)\right|&=\left|\sin \left({\frac {1}{x}}\right)-0\right|\\[0.5em]&\quad {\color {OliveGreen}\left\downarrow \ x={\frac {1}{{\frac {\pi }{2}}+2k\pi }}\right.}\\[0.5em]&=\left|\sin \left({\frac {\pi }{2}}+2k\pi \right)\right|\\[0.5em]&\quad {\color {OliveGreen}\left\downarrow \ \sin \left({\frac {\pi }{2}}+2k\pi \right)=1\right.}\\[0.5em]&=\left|1\right|\geq {\frac {1}{2}}=\epsilon \end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/3fa308896735c40f86148e2d6dfd09fa66590f03)